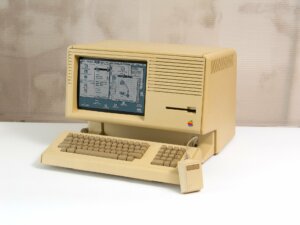

The “bigrigio” and its siblings: when the telephone was indestructible

Laura Primiceri - 23 January 2026

FortiGate and FortiCloud SSO: when patches don't really close the door

Luca Stivali - 23 January 2026

Your MFA is no longer enough: phishing kits bypass multi-factor authentication

RHC Editorial Team - 23 January 2026

Nearly 2,000 bugs in 100 dating apps: how your data can be stolen

RHC Editorial Team - 23 January 2026

MacSync: the macOS malware that empties your wallet… weeks later

RHC Editorial Team - 23 January 2026

Cybercrime and confusion: “The Gentlemen” targets Italian transport, but did it hit the wrong target?

Vincenzo Miccoli - 23 January 2026

The mind behind passwords. Learning by playing: why experience beats explanation (Episode 6)

Simone D'Agostino - 22 January 2026

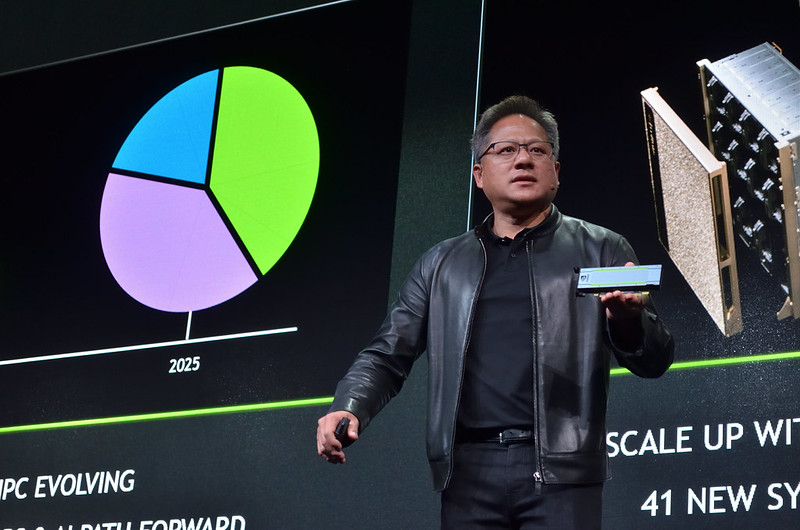

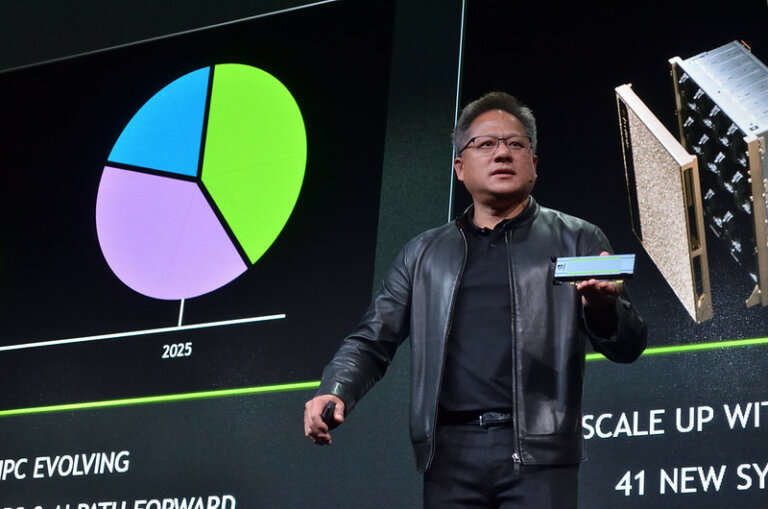

He arrived in America with 200 dollars and ended up in a reform school: today he controls 90% of the world's AI

Carlo Denza - 22 January 2026

The thin red line of criminal liability in cybersecurity

Paolo Galdieri - 22 January 2026

Vulnerabilities discovered in Foxit PDF Editor, Epic Games Store and MedDream PACS

RHC Editorial Team - 22 January 2026

Featured articles

Vulnerabilities

In the security world, a belief as widespread as it is dangerous has circulated for years: “if it's patched, it's secure”. The case of administrative access to FortiGate devices via FortiCloud SSO shows, once again, that this claim is not only incomplete, but…

Cybercrime

The number of PhaaS kits has doubled compared with last year, according to an analysis by Barracuda Networks, adding to the strain on security teams. The aggressive newcomers…

Cybercrime

A study of 100 dating apps has revealed a disturbing picture: nearly 2,000 vulnerabilities were found, 17% of which were classified as critical. The analysis was conducted by AppSec Solutions. The…

Innovation

How three insiders with just 200 dollars in their pockets reached a market capitalization of 5 trillion and created the company that powers more than 90% of artificial intelligence. Kentucky, 1972. A nine-year-old Taiwanese boy who…

Cybercrime

For over a year, the North Korean group PurpleBravo has been running a targeted malware campaign called “Contagious Interview”, using fake job interviews to attack companies in Europe, Asia, the Middle East and Central America. Researchers…