CISA alert: active exploitation of VMware vCenter. Risk of unauthenticated RCE

RHC Editorial Staff - 25 January 2026

Linux 7.0 says goodbye to the HIPPI relic: a piece of supercomputing history removed

Silvia Felici - 25 January 2026

The Grok scandal: 3 million sexualized images generated in 11 days

Agostino Pellegrino - 25 January 2026

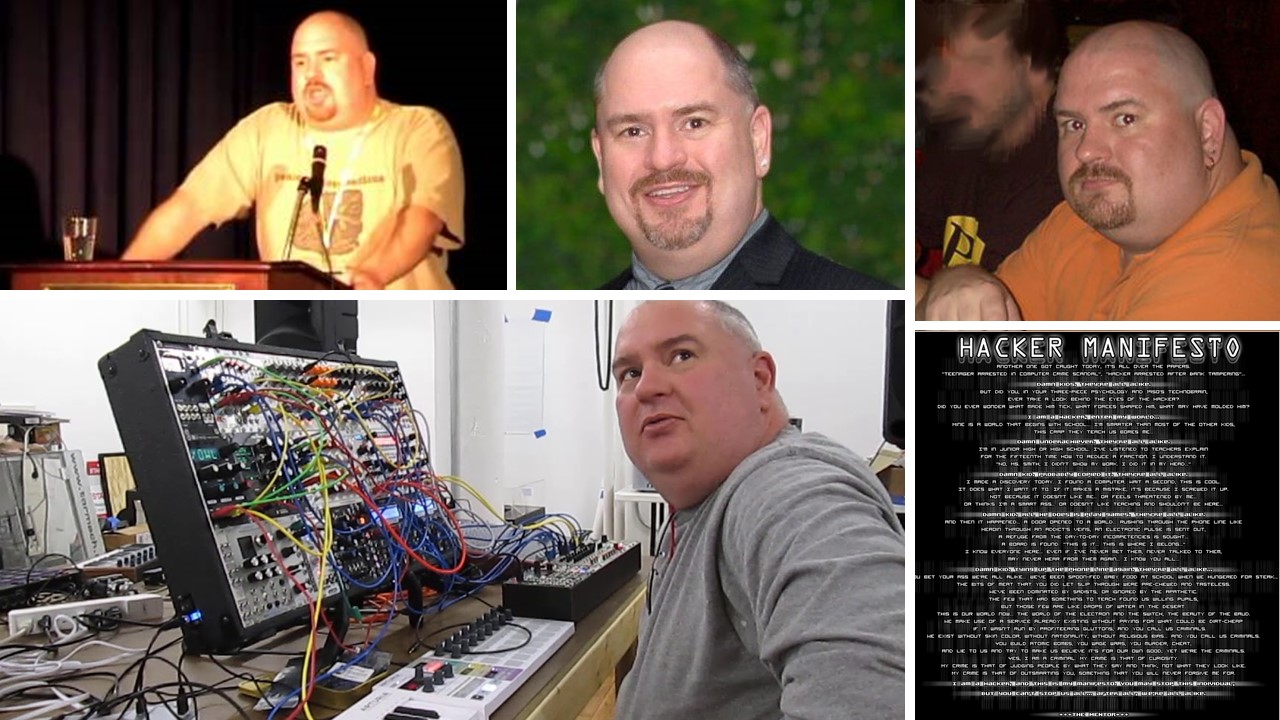

“I stole 120,000 Bitcoin”: the confession of the Bitfinex hacker who now wants to defend cyberspace

Agostino Pellegrino - 25 January 2026

School, Security and National Capability: why 2026 is a prisoner of the problems of 1960

Roberto Villani - 24 January 2026

AGI: the CEOs of Google and Anthropic sound the alarm at Davos – the world will not be ready!

RHC Editorial Staff - 24 January 2026

A working browser built with AI in 3 million lines of code: breakthrough or illusion?

RHC Editorial Staff - 24 January 2026

Kimwolf: the IoT botnet moving silently through corporate and government networks

Sandro Sana - 24 January 2026

NoName057(16) hits Italy 487 times in the last 3 months: the DDoS wave does not stop

RHC Editorial Staff - 24 January 2026

Submarine cables: ignored warnings and uncertain proceedings. The truth about incidents under the sea

Sandro Sana - 24 January 2026

Featured articles

Cyber News

The critical vulnerability recently added to the Known Exploited Vulnerabilities (KEV) catalog by the Cybersecurity and Infrastructure Security Agency (CISA) affects Broadcom VMware vCenter Server and is being actively exploited by criminal hackers to breach…

The story of Ilya Lichtenstein, the hacker responsible for one of the largest cyberattacks ever carried out against cryptocurrencies, reads like an episode of a TV series, yet it is entirely real. After being released,…

If there were still any doubts as to whether the world's leading artificial intelligence companies agreed on the direction of AI, or on how fast it should get there, those doubts were dispelled at the World Economic Forum…

A week ago, Cursor CEO Michael Truell announced a supposedly extraordinary result. He said that, using GPT-5.2, Cursor had created a browser capable of running uninterrupted for a full week. This browser…

Italy remains one of the main targets of the DDoS attack campaign carried out by the hacktivist group NoName057(16). According to statements made directly by the collective, the country suffered 487 cyberattacks between October 2024…