On October 21, 2025, an international team of researchers from 29 leading institutions, including Stanford University, MIT, and the University of California, Berkeley, completed a study that marks a milestone in the development of artificial intelligence: the first quantitative framework for evaluating Artificial General Intelligence (AGI).

Based on the Cattell-Horn-Carroll (CHC) psychological theory, the proposed model divides general intelligence into ten distinct cognitive domains, each with a 10% weight, for a total of 100 points representing the human cognitive level.

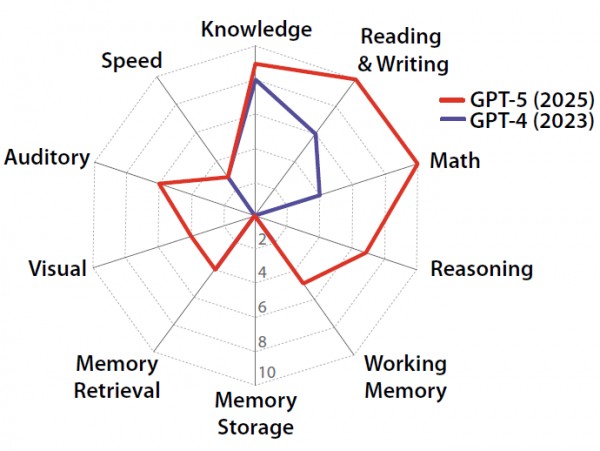

On this scale, GPT-4 scored 27% and GPT-5 scored 58%. The results highlight an uneven distribution of abilities: excellent results in language and knowledge, but a score of zero in long-term memory.

According to the researchers, determining whether an AI can be considered “intelligent” like a human requires a broad, multidimensional assessment. Like a comprehensive medical checkup that measures the health of multiple organs, the framework analyzes a system across multiple cognitive domains, from reasoning to language and from memory to sensory perception.

The new framework is based on the CHC theory, which has been used for decades in psychology to measure human cognitive abilities. This approach allows us to break down intelligence into analytical components, such as cognition, reasoning, visual processing, and memory.

The team’s goal was to transform these principles into an objective measurement system that could also be applied to artificial intelligence models.

The tests assessed GPT-4 and GPT-5 in ten areas: general knowledge, text comprehension and production, mathematics, immediate reasoning, working memory, long-term memory, memory retrieval, visual processing, auditory processing, and reaction speed.
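To make the arithmetic of the scale concrete, here is a minimal sketch of how ten equally weighted domains could add up to a 100-point total. The domain names follow the list above. The function name `agi_score`, the 0 to 10 point range per domain, and the example profile are assumptions made for illustration; they are not the study's actual test battery or its reported per-domain results.

```python
# Sketch of an equally weighted, ten-domain score (assumed: 0-10 points each).
DOMAINS = (
    "general knowledge",
    "text comprehension and production",
    "mathematics",
    "immediate reasoning",
    "working memory",
    "long-term memory",
    "memory retrieval",
    "visual processing",
    "auditory processing",
    "reaction speed",
)
MAX_POINTS_PER_DOMAIN = 10.0  # 10% weight each, so ten domains total 100


def agi_score(domain_points: dict[str, float]) -> float:
    """Sum per-domain points (0-10 each) into a total on a 0-100 scale."""
    missing = set(DOMAINS) - set(domain_points)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    total = 0.0
    for name in DOMAINS:
        points = domain_points[name]
        if not 0.0 <= points <= MAX_POINTS_PER_DOMAIN:
            raise ValueError(f"{name}: {points} is outside 0-10")
        total += points
    return total


# Hypothetical profile, NOT taken from the study: average everywhere,
# perfect in mathematics, and zero in long-term memory.
example = {name: 5.0 for name in DOMAINS}
example["mathematics"] = 10.0
example["long-term memory"] = 0.0

print(agi_score(example))  # 50.0
```

Because every domain is capped at 10 points, a perfect score in one area cannot offset a zero in another, which is why an uneven profile keeps the total well below 100.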

GPT-5 showed significant improvements over its predecessor, achieving near-perfect scores in language, knowledge, and mathematics. However, both versions failed on tests of long-term memory and consistent information management over time.

According to researchers, this shows that current AI systems compensate for their shortcomings through “capability distortion” strategies, exploiting huge amounts of data or external tools to mask structural limitations.

The report describes the distribution of results as “sawtooth”: excellence in some areas and serious deficiencies in others. For example, GPT-5 behaves like a student who is brilliant in theoretical subjects but unable to remember the lessons learned. This cognitive fragmentation highlights that, despite displaying advanced abilities, AIs still lack a continuous and autonomous understanding of the world.

The study’s authors compare AI to a sophisticated engine lacking some essential components. Even with a state-of-the-art linguistic and mathematical system, the lack of a stable memory and a true learning mechanism limits overall capacity. For artificial intelligence, this translates into high performance on specific tasks, but poor adaptability and weak autonomous learning over the long term.

In addition to providing a scientific basis for evaluating artificial intelligence, the study helps redefine expectations for AGI development. It suggests that simply scaling up models or training data is not enough to achieve human-like cognition: new architectures capable of integrating memory, reasoning, and experiential learning are needed.

The researchers also emphasize the importance of addressing AI’s so-called “hallucinations” (information fabrication errors), which remain a critical issue in all tested models. Awareness of these limitations can guide a more informed use of the technology, avoiding both excessive enthusiasm and unfounded fears.

Ultimately, the main contribution of this research is the introduction of a true “cognitive yardstick” to measure artificial intelligence objectively and comparably. Only by understanding its current strengths and weaknesses will it be possible to effectively guide the next generation of intelligent systems.
