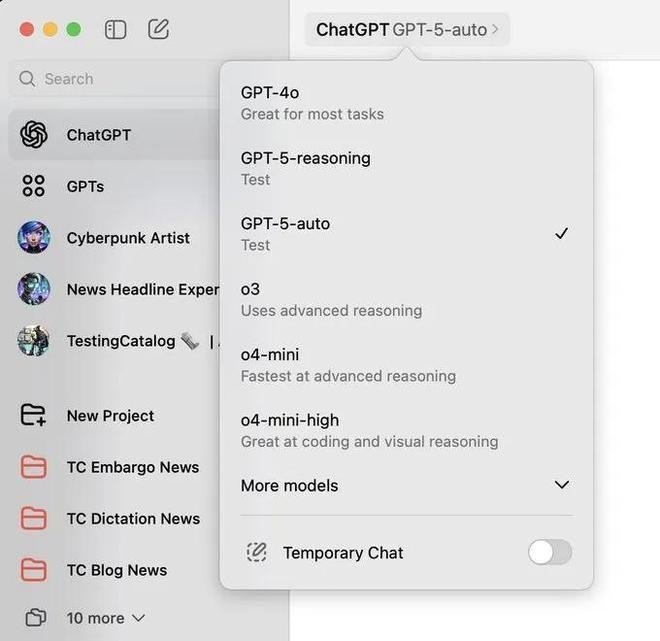

GPT-5 hasn’t even been released yet, and internet users are already making all kinds of memes to vent their impatience. Indeed, rumors about GPT-5 have been circulating nonstop in recent days. First, some users found references to GPT-5-Auto and GPT-5-Reasoning models in the ChatGPT app for macOS.

Then they discovered that Microsoft Copilot and Cursor were also quietly being connected to GPT-5 for testing. On August 1, The Information published a lengthy article titled “Inside OpenAI’s Rocky Path to GPT-5”, reporting further inside details about GPT-5.

GPT-5 is an improvement, but the leap in performance is smaller than in earlier generations. Last December, OpenAI presented the results of its test-time scaling technique, hailed as a fundamental breakthrough in the capabilities of large models in the post-pretraining era.

The demonstration showed that AI performance keeps improving when a model is given more time and computing power to work on a task. This approach had already proven effective in practice with OpenAI o1 and DeepSeek-R1. It seemed that ChatGPT users would soon be impressed by the powerful capabilities of this new model. However, the excitement didn’t last long.

According to two people involved in its development, when OpenAI researchers adapted the new model into a chat-based version, o3, that could respond to requests from ChatGPT users, the performance gains it had shown on earlier benchmarks largely disappeared.

This is just one example of the many technical challenges OpenAI has faced this year. These mounting difficulties are slowing the pace of its AI development and could even affect the business of ChatGPT, its highly successful AI application.

With the release of GPT-5 approaching, OpenAI researchers are said to have found a solution. According to people familiar with the matter, including OpenAI engineers, OpenAI’s next flagship model, GPT-5, will have significantly improved capabilities in programming, mathematics, and other areas.

One source said the new model is better at adding features when writing application code (so-called “vibe coding”), producing apps that are easier to use and more visually appealing. The same source said GPT-5 is also better than its predecessors at guiding AI agents through complex tasks with minimal human supervision. For example, it can follow complex instructions and a list of rules to decide when an automated customer-service system should issue a refund, whereas earlier models first had to work through several tricky cases (so-called edge cases) before they could handle such refunds.

Another person familiar with the matter said the improvements cannot match the leaps seen between earlier GPT generations, such as the jump from GPT-3 in 2020 to GPT-4 in 2023. The slowdown in performance gains OpenAI has experienced over the past 12 months suggests it may struggle to pull ahead of its main rivals, at least in terms of AI capabilities.

OpenAI’s current models have already created significant business value through ChatGPT and various other applications, and even incremental improvements could boost customer demand. Such improvements could also give investors the confidence to fund OpenAI’s plan to spend $45 billion over the next three and a half years on GPUs to develop and run its products.

Improving automated coding capabilities has become OpenAI’s top priority. Recent progress also helps explain why OpenAI executives have told some investors in recent weeks that they believe the company can reach its “GPT-8” goal. This is consistent with CEO Sam Altman’s public claims that OpenAI, with its current technical know-how, is well on its way to creating artificial general intelligence (AGI) that rivals the smartest humans.

While AGI is still a long way off, the soon-to-be-released GPT-5 may offer appeal beyond better coding and reasoning. Microsoft holds exclusive rights to use OpenAI’s intellectual property, and according to a Microsoft employee familiar with the matter, some Microsoft executives have told employees that tests showed GPT-5 can generate higher-quality code and other text responses without consuming more computing resources. This is partly because it is better than previous models at judging which tasks need more or less computing power, the person said. According to an internal OpenAI assessment, OpenAI made automated coding a priority after competitor Anthropic took the lead last year in developing such models and selling them to software developers and to coding assistants like Cursor.

OpenAI’s progress has not been easy: its researchers and management have faced new pressures this year. The first is its delicate relationship with Microsoft. Although Microsoft is OpenAI’s largest outside shareholder and, under a contract between the two, has the right to use some of OpenAI’s technology until 2030, some senior OpenAI researchers are reluctant to hand their innovations and inventions over to Microsoft.

Microsoft and OpenAI are also closely tied financially, but they disagree over specific terms of their partnership, and each side is seeking concessions from the other. OpenAI hopes to restructure its for-profit arm to prepare for a future IPO. Although some details remain unsettled, the two sides have reached a preliminary consensus on several key points, such as Microsoft potentially holding about 33% of OpenAI’s equity after the restructuring.

The second pressure is the continued poaching of its researchers. Meta recently spent heavily to lure away more than a dozen OpenAI researchers, some of whom had worked on OpenAI’s recent technical improvements. These departures, and the staffing adjustments that followed, are putting pressure on OpenAI’s management.

Last week, Jerry Tworek, OpenAI’s vice president of research, complained to his boss, Mark Chen, about the team changes in an internal Slack message that many colleagues saw. Tworek said he needed to take a week off to reassess the situation, but he ultimately did not take it.

Although OpenAI has made some commercial progress, concerns remain within the company about whether it can keep improving its AI and maintain its lead, especially against well-funded competitors.

In the second half of 2024, OpenAI developed a model called Orion, which it initially planned to release as GPT-5, expecting it to outperform the existing GPT-4o model. However, Orion fell short of the expected improvement, so OpenAI released it as GPT-4.5, which ultimately made little impact.

Orion’s failure is partly due to limitations in pre-training, the first stage of model development, in which a model processes vast amounts of data to learn the connections between concepts. Besides facing a shortage of high-quality data, OpenAI also found that optimizations made to Orion, while effective when the model was small, stopped working as it was scaled up.
