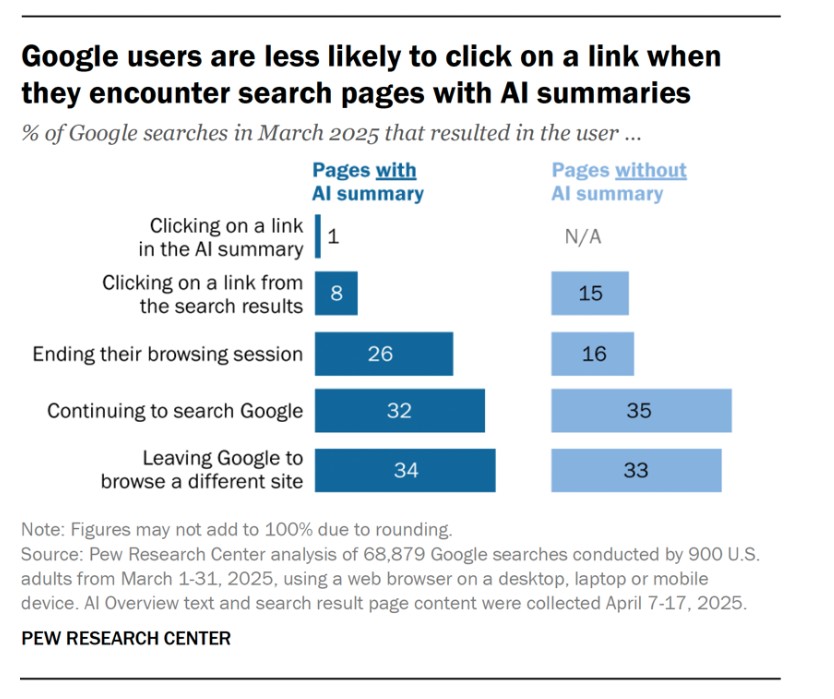

Google is turning its search engine into a showcase for artificial intelligence, and that could spell disaster for the entire digital economy. According to a new study from the Pew Research Center, users click through to the original source in only 1% of searches that display an AI-generated summary. In other words, the vast majority of users never visit the sites their information comes from.

Google's AI Overviews feature, first tested in 2023, quickly came to dominate search results, displacing the traditional “10 blue links” model. Instead of reading original text written by journalists and bloggers, users receive an algorithmically generated summary. The problem is that these summaries not only reduce traffic to websites but often point readers toward secondary, less reliable sources.

That is what happened to 404 Media’s investigation into AI-generated tracks published under the names of deceased artists. Although the story drew a response from Spotify and led to action, Google searches initially showed an AI-generated summary based on a secondary blog, dig.watch, rather than on the original reporting. In AI Overview mode, the 404 Media article did not appear at all; only the versions published by the aggregators TechRadar, Mixmag, and RouteNote did.

Original content producers are losing readers, revenue, and the ability to operate sustainably. Even high-quality content is drowning in repackaged information produced without human involvement. Fake AI-driven aggregator sites are now everywhere: they capture traffic without investing in journalism.

To make matters worse, AI Overviews are easy to fool. Artist Eduardo Valdés-Hevia ran an experiment in which he posted a fictitious theory of “parasitic encephalization.” Within hours, Google was presenting it as scientific fact. He then coined the term “AI Traffic Jam” and got the same result. Later, he mixed real diseases with invented ones, such as Dracunculus graviditatis, and the AI once again failed to distinguish fiction from reality.

Other examples include Google suggesting that users add glue to pizza because of a joke posted on Reddit, and reporting the death of journalist Dave Barry, who is still alive. The algorithm cannot recognize humor, satire, or lies, yet it presents them with complete confidence. The danger lies not only in the errors themselves but in their scale. As Valdés-Hevia observes, a few forum posts written in “scientific” language are enough for a lie to be passed off as truth. Google thus unwittingly becomes a tool for spreading misinformation.

The problem is systemic. Search traffic, which has long been what keeps media outlets and blogs alive, is disappearing. SEO no longer works as it once did, and small businesses and large media outlets alike are losing money. Instead of greater competition, we are seeing a centralized stream of errors and misinformation, amplified by trust in the Google brand. Some companies offer alternatives, such as ad-free search engines and AI-powered content filters. But as long as Google remains the standard, users will get not what they are looking for but what the algorithm decides to show them.

In an official statement, Google called Pew’s methodology “unrepresentative” and noted that it “sends billions of clicks to websites every day.” But the data tells a different story: when AI summaries appear, people open fewer websites.

And this is slowly but surely destroying the ecosystem of human knowledge on the Internet.
