Artificial intelligence (AI) is a discipline that aims to develop systems capable of emulating some human cognitive abilities.

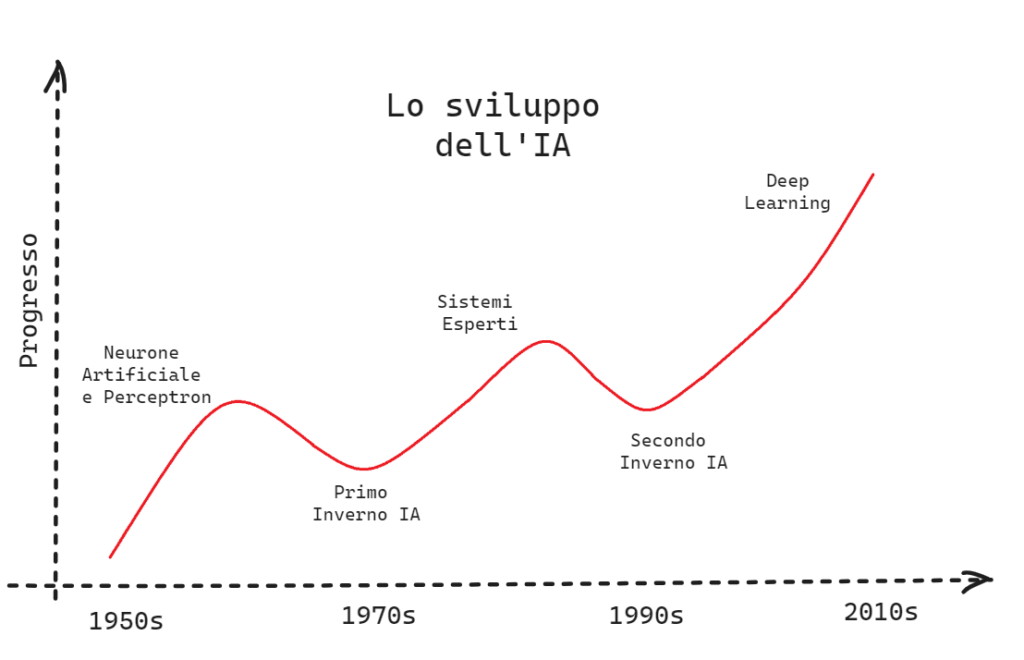

Over the years, AI has gone through several phases, with periods of intense activity followed by slowdowns. In this article we explore the history of artificial intelligence and its key moments.

In the 1950s and 1960s, many scientists and researchers helped lay the foundations of AI, supported by government funding for research in the field. Some of the highlights that marked the birth of artificial intelligence:

In the 1970s, AI faced several challenges that slowed its progress. One significant event was the so-called XOR affair, which grew out of Marvin Minsky and Seymour Papert's 1969 book “Perceptrons”. The book highlighted the limits of perceptron models on even simple problems, such as reproducing the behavior of the XOR function. In particular, it showed that a single layer of connected units can only classify inputs that can be separated by a straight line (or, in higher dimensions, a hyperplane). XOR is not such a problem: no single line separates the inputs (0,1) and (1,0), which map to 1, from (0,0) and (1,1), which map to 0.
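To make the XOR limitation concrete, here is a minimal sketch in Python. It uses a single perceptron with a step activation, trained with the classic perceptron learning rule. The learning rate, the epoch limit and the zero initial weights are illustrative choices, not taken from the historical work. On AND, which is linearly separable, the rule converges. On XOR, no choice of weights classifies all four inputs correctly, so accuracy stays below 100%.

```python
def step(z: int) -> int:
    """Threshold activation: fire (1) only when the weighted sum is positive."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs: int = 100, lr: int = 1):
    """Perceptron learning rule. samples: list of ((x1, x2), target)."""
    w = [0, 0]
    b = 0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            pred = step(w[0] * x1 + w[1] * x2 + b)
            delta = target - pred
            if delta != 0:
                # Nudge the separating line toward the misclassified point
                errors += 1
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
        if errors == 0:
            break  # every sample is classified correctly: converged
    return w, b


def accuracy(samples, w, b) -> float:
    correct = sum(step(w[0] * x1 + w[1] * x2 + b) == t for (x1, x2), t in samples)
    return correct / len(samples)


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [(x, x[0] & x[1]) for x in INPUTS]  # linearly separable
XOR = [(x, x[0] ^ x[1]) for x in INPUTS]  # not linearly separable

for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b = train_perceptron(data)
    print(name, "accuracy:", accuracy(data, w, b))
```

Running more epochs does not help with XOR. The best any single line can do on those four points is three out of four.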

Another event that contributed to the slowdown was the publication of the ALPAC report in 1966, written by the committee of the same name, the Automatic Language Processing Advisory Committee. The committee highlighted the limitations of machine translation from Russian to English. Machine translation was a strategic goal for the United States, but the report stressed that, despite the effort invested, machine translation systems had delivered disappointing results.

These analyses, together with a low return on investment, led people to question the effectiveness of artificial neural networks and ushered in a period of skepticism and disillusionment toward AI. It is worth stressing, however, that this first AI “winter” also taught important lessons. The limits of perceptrons showed the need to develop more complex and adaptable AI models. This period of reflection also fostered greater awareness of the challenges and problems that come with developing AI.

The 1980s saw a significant revival of AI research and development, driven mainly by symbolic AI. This branch of AI, distinct from machine learning, focuses on representing knowledge and on using rules and inference to tackle complex problems. Expert systems and knowledge-based systems were developed as tools for solving complex problems using knowledge bases represented mostly as if-then rules. A notable forerunner of this approach is SHRDLU, developed by Terry Winograd at MIT between 1968 and 1970. SHRDLU was an AI program that conversed in natural language about a simulated world of blocks, showing a degree of understanding of context and semantics despite relying on rule-based techniques.
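The if-then rules at the heart of expert systems can be illustrated with a toy forward-chaining engine. Starting from known facts, it repeatedly fires every rule whose premises are all satisfied, until no new fact can be derived. The rule base and fact names below are hypothetical and purely illustrative. Real expert systems were considerably richer, and this sketch only shows the basic inference loop (it needs Python 3.9+ for the `tuple[...]` type alias).

```python
# Hypothetical rule base: each rule is (premises, conclusion).
Rule = tuple[frozenset[str], str]

RULES: list[Rule] = [
    (frozenset({"has_fever", "has_cough"}), "suspect_flu"),
    (frozenset({"suspect_flu", "short_of_breath"}), "refer_to_doctor"),
]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Fire every rule whose premises are all known, until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # IF all premises hold THEN assert the conclusion
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(forward_chain({"has_fever", "has_cough", "short_of_breath"}, RULES)))
```

The knowledge lives entirely in `RULES`. Extending the system means writing more rules by hand, which is exactly the maintenance burden discussed later in this article.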

Alongside expert systems, another milestone of this period was the Stanford Cart, a wheeled cart developed at Stanford that could navigate autonomously through a room cluttered with obstacles. It showed that a robot could perceive its surroundings and move through them on its own, opening new prospects for practical applications of AI. You can watch The Stanford Cart crossing a room while avoiding obstacles in the video below!

The 1980s were also marked by theoretical work on algorithms that are fundamental to AI, such as back-propagation. It was popularized in 1986 by David Rumelhart, Ronald Williams and Geoffrey Hinton, one of the fathers of modern AI. The algorithm propagates the output error backwards through the network, layer by layer, to compute how much each weight contributed to it. That gradient is then used to adjust the weights, greatly improving the ability of multi-layer neural networks to fit data and opening new possibilities for AI. Dig deeper with this lecture by Hinton!
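To illustrate what back-propagation computes, here is a minimal sketch in plain Python. It uses a tiny network with 2 inputs, 2 sigmoid hidden units and 1 sigmoid output, trained on XOR with mean squared error. The architecture, loss, learning rate and random seeds are assumptions made for the example.

- `backprop` propagates the output error backwards to get the gradient of the loss with respect to every weight.
- `max_gradient_error` checks those gradients against finite differences.
- `train` applies plain gradient descent.

With so few hidden units, training is not guaranteed to reach a perfect XOR solution from every starting point.

```python
import copy
import math
import random

# Toy 2-H-1 network with sigmoid units, trained on XOR with mean squared error.
H = 2  # number of hidden units (an arbitrary choice for this sketch)
XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(seed=0):
    rng = random.Random(seed)

    def u():
        return rng.uniform(-1.0, 1.0)

    return {"W1": [[u(), u()] for _ in range(H)], "b1": [u() for _ in range(H)],
            "W2": [u() for _ in range(H)], "b2": u()}


def forward(p, x):
    h = [sigmoid(p["W1"][j][0] * x[0] + p["W1"][j][1] * x[1] + p["b1"][j]) for j in range(H)]
    y = sigmoid(sum(p["W2"][j] * h[j] for j in range(H)) + p["b2"])
    return h, y


def loss(p, data):
    # L = (1/n) * sum of 0.5 * (y - t)^2
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)


def backprop(p, data):
    """Gradient of the loss w.r.t. every parameter, obtained by propagating the error backwards."""
    g = {"W1": [[0.0, 0.0] for _ in range(H)], "b1": [0.0] * H, "W2": [0.0] * H, "b2": 0.0}
    n = len(data)
    for x, t in data:
        h, y = forward(p, x)
        # Output error: dL/dz_out = (y - t)/n * sigmoid'(z_out), where sigmoid' = y(1 - y)
        d_out = (y - t) / n * y * (1.0 - y)
        g["b2"] += d_out
        for j in range(H):
            g["W2"][j] += d_out * h[j]
            # Error reaching hidden unit j, scaled by the weight linking it to the output
            d_h = d_out * p["W2"][j] * h[j] * (1.0 - h[j])
            g["b1"][j] += d_h
            g["W1"][j][0] += d_h * x[0]
            g["W1"][j][1] += d_h * x[1]
    return g


# Every parameter, addressed by its path inside the params dict.
PATHS = ([("b2",)] + [("W2", j) for j in range(H)] + [("b1", j) for j in range(H)]
         + [("W1", j, k) for j in range(H) for k in range(2)])


def get(tree, path):
    for key in path:
        tree = tree[key]
    return tree


def add_at(tree, path, delta):
    """Add delta (in place) to the scalar stored at `path`."""
    *parent_path, last = path
    get(tree, parent_path)[last] += delta


def max_gradient_error(p, data, eps=1e-5):
    """Largest gap between back-propagated and central finite-difference gradients."""
    g = backprop(p, data)
    worst = 0.0
    for path in PATHS:
        plus, minus = copy.deepcopy(p), copy.deepcopy(p)
        add_at(plus, path, eps)
        add_at(minus, path, -eps)
        numeric = (loss(plus, data) - loss(minus, data)) / (2 * eps)
        worst = max(worst, abs(numeric - get(g, path)))
    return worst


def train(p, data, lr=0.5, epochs=2000):
    """Plain full-batch gradient descent driven by the back-propagated gradients."""
    for _ in range(epochs):
        g = backprop(p, data)
        for path in PATHS:
            add_at(p, path, -lr * get(g, path))
    return p


params = init_params(seed=0)
print("max gradient-check error:", max_gradient_error(params, XOR_DATA))
before = loss(params, XOR_DATA)
train(params, XOR_DATA)
print("loss before/after training:", before, loss(params, XOR_DATA))
```

The gradient check is the useful part here. If the backward pass matches finite differences, the chain rule has been applied correctly, whatever the training outcome.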

Despite this remarkable progress, in the late 1980s and early 1990s AI again ran into challenges and slowdowns. In particular, expert systems had several limitations, including their dependence on the knowledge supplied up front and their limited ability to adapt across domains. Maintaining and updating the knowledge base also required significant effort and cost. This led to cuts in funding for AI research, which at the time still depended heavily on government money.

In recent decades, Artificial Intelligence has undergone a remarkable revival thanks to the explosion of machine learning (ML) and deep learning (DL). Some of the main developments of this period:

The rise of multi-layer neural networks: A turning point came in 2012, when AlexNet, a deep convolutional neural network, won the ILSVRC image classification challenge by a wide margin. Models like AlexNet paved the way for neural networks that learn complex representations directly from data. DL has since revolutionized many fields, such as natural language processing and computer vision.

GPU hardware: The growth in computing power was a crucial factor in the AI boom. Advances in hardware, in particular the arrival of high-performance graphics processing units (GPUs), gave machines the resources needed to train complex models. GPUs run in parallel the large matrix operations on which neural networks are built.

The annual ILSVRC classification challenge: Launched in 2010, the ImageNet Large Scale Visual Recognition Challenge played a key role in driving progress in image recognition. The competition is tied to the ImageNet dataset, which contains over 15 million images in more than 20,000 classes. Its classification task uses a subset of 1,000 classes. Deep learning methods significantly reduced the classification error, opening new prospects in computer vision.

The AI community: The evolution of AI has been fostered by knowledge sharing. Openly available code, datasets and scientific publications played a crucial role. Open-source frameworks such as TensorFlow and PyTorch, backed by major tech companies (Google and Facebook respectively), gave development a strong boost by giving researchers and developers access to tools and resources to experiment with. ML competitions have become go-to platforms for sharing models, obtaining datasets and fostering collaboration among researchers.

Private investment: Tech giants recognized AI's potential to transform their products and services and to open up new business opportunities. As a result, they have committed considerable funds to AI research and development.
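The ILSVRC classification task ranked entries primarily by top-5 error. A prediction counts as correct if the true label is among the model's five highest-scoring classes. The following is a minimal sketch of the metric in Python. The scores and labels are made-up numbers for illustration only, not ImageNet data.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of samples whose true label is NOT among the k highest-scoring classes.

    scores: one list of per-class scores per sample; labels: true class index per sample.
    """
    misses = 0
    for row, label in zip(scores, labels):
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Made-up scores for 3 samples over 6 classes (illustrative only)
SCORES = [
    [0.10, 0.50, 0.20, 0.05, 0.12, 0.03],
    [0.30, 0.25, 0.20, 0.12, 0.08, 0.05],
    [0.30, 0.25, 0.20, 0.15, 0.08, 0.02],
]
LABELS = [1, 2, 5]

print("top-1 error:", top_k_error(SCORES, LABELS, k=1))
print("top-5 error:", top_k_error(SCORES, LABELS, k=5))
```

With a 1,000-class task, top-5 error is more forgiving than top-1. A model is not penalized for ranking a closely related class above the correct one, as long as the correct class stays in its top five.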

The history of AI is marked by periods of enthusiasm and discovery followed by challenges and slowdowns. In recent decades, however, AI has made significant progress thanks to the evolution of machine learning and deep learning. Symbolic AI paved the way for knowledge representation and the use of rules and inference. Machine learning and deep learning then transformed how machines acquire knowledge, allowing them to learn from large amounts of data. As we continue to explore what AI can do, it is essential to also consider the ethical and social challenges that come with its development and use. AI holds great promise, but issues such as privacy, security, accountability and social impact must be addressed carefully to ensure that artificial intelligence is used responsibly and for everyone's benefit. The RHC AI group aims to write accessible articles that go deeper into the topics discussed here and much more! More details on upcoming articles can be found here, stay tuned!
