#OpChildSafety: as in every story, there is always more than a reader or viewer can see and, above all, know. The protagonists of this one are the Electronik Tribulation Army (ETA) and the W1nterSt0rm conglomerate, an OSINT and threat intelligence group committed to training its members and then fighting the online sexual predators who target children.

But this is not only their story: it is also the story of how they uncovered a vast network of CSAM (Child Sexual Abuse Material), from which a many-headed monster emerged: Hydra.

The story behind the #OpChildSafety (Operation Child Safety) campaign has a long history on the Internet. Many of you will remember #OpPedoHunt, an operation created by Anonymous to protect those who have not yet developed the ability to make decisions or to judge the world. It was a reminder that certain "wars", when grounded in moral and ethical rules, are still worth fighting, because some people, above all pre-adolescent children, risk becoming objects or commodities in a potentially sprawling network. In some cases that network becomes an outright business, as the millions of child sexual abuse videos and photos circulating on the Internet show.

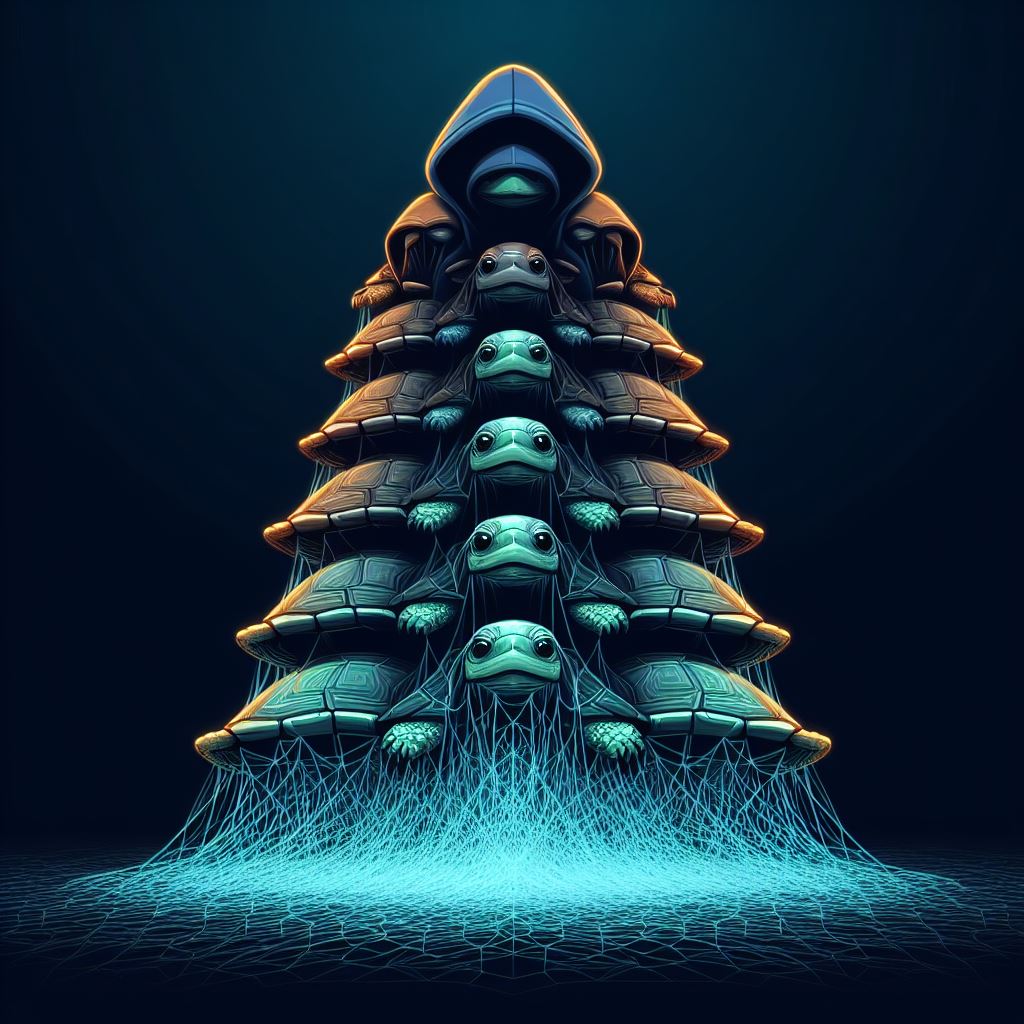

It brings to mind "Turtles all the way down", an expression of the problem of infinite regress. It is based on the mythological idea of a great turtle that holds up the world while resting on other turtles, in an endless column. Likewise, the deeper ETA-W1nterSt0rm dug (and it is still digging), the wider the dark chasm that opened up, forcing the team to reassess and reformulate its starting premises every day. But before going any further, I have to ask Ghost Exodus an important question.

O: "Before telling this story, can you explain the culture behind ETA-W1nterSt0rm? What ethical and moral rules govern hunting predators, as opposed to using illegal hacking techniques? And, above all, why is it important to remember them?"

Ghost Exodus: "There is no short answer to that question. I have watched Anonymous do the same things over and over, expecting different results, when it comes to their online child safety initiatives. In many cases, I have seen groups make misleading claims about their success in eliminating threats against children. I have seen them do this kind of work out of selfish ambition, exposing themselves and others to the risk of arrest.

"Not all of them, but most of these groups do not seem to understand the damage they are doing to investigative work that could help arrest the people who feed the monster that is CSAM. One person files a report with the Internet Watch Foundation (IWF) or the National Center for Missing and Exploited Children (NCMEC). Another launches DDoS attacks against the websites that were just reported, undermining any investigative effort. Yet another reports accounts to get them banned, thinking they are doing the world a service, while doing nothing meaningful to get CSAM operators arrested. Others publicly dox targets, which in reality only warns those targets that they have been discovered. It is pointless chaos.

"I don't think most of these pedophile hunters understand how dangerous this territory is. I spent 11 years in federal prison for computer hacking and was forced to live alongside pedophiles and men who helped feed the CSAM monster. I also understand this type of criminal case well, and how the FBI catches offenders, which can put honest pedophile hunters at risk. That is why W1nterSt0rm had to create a culture that would guide hunters, protect them and teach them how to maximize their effectiveness. To that end, we developed a set of rules called 'The Charter', our founding document. Through these rules we help guide other hunters who are looking for direction and guidance. #WeGuardTheCharter."

The Internet has become an uncertain and often dangerous place for children, especially pre-adolescents. None of this would be a problem if predators, always lying in wait, did not follow precise grooming strategies, and if social media platforms adopted effective countermeasures. Unfortunately, social media still contain sections with adult content that do not seem particularly hard to access.

It should also be noted that the discovery made by an ETA-W1nterSt0rm member on February 28 was extremely significant in light of the recent lawsuit filed against Meta's platforms and CEO Mark Zuckerberg by New Mexico Attorney General Raúl Torrez. A member of Anonymous, a former military intelligence analyst, found a link in a public Facebook group that the team was investigating and building a case against for distributing child sexual abuse material. At some point the group's privacy settings were switched to private, but not before its WhatsApp and Telegram groups had been discovered.

Raúl Torrez's allegations are not the only ones. A report by the Wall Street Journal and researchers at Stanford University and the University of Massachusetts Amherst highlighted how Meta's ecosystem allowed child sexual abuse material to be distributed while failing to detect predator networks. The Wall Street Journal investigation also reported that Instagram's algorithm would recommend sexual or child sexual abuse videos among its Reels. It further reported that the algorithm worked in such a way that even underage users were shown Reels unsuitable for them.

Reporting content, however, is not enough, a problem ETA-W1nterSt0rm has also highlighted: once banned, a user simply creates a new account and keeps distributing CSAM. Instead, these users should be reported to the appropriate bodies, such as NCMEC in the United States and Child Exploitation and Online Protection (CEOP), a unit of the National Crime Agency in the United Kingdom.

O: "Here is another interesting question: can you explain how ETA-W1nterSt0rm members gather and report evidence of CSAM, and who receives the final report?"

Ghost Exodus: "The first rule of thumb is that OPSEC is a religion. The term stands for operational security, and calling it a religion conveys the zeal and devotion we must bring to protecting our identities online. I can't go into detail about our evidence-gathering process without helping the enemy learn our methods, but I can describe it in general terms.

"It usually starts with someone accidentally stumbling across a link to child sexual abuse material on Facebook. That says a lot about the social media giant, since its algorithms do not recognize this illegal content, and it is why Facebook is being called out. One link leads to another, and then another. It is plainly evident that child predators use Facebook.

"From there, my team works on the data side of these investigations using Open Source Intelligence (OSINT) techniques. We pull historical WHOIS records and use tools to uncover back-end IP addresses. We run web scrapers to extract any payment methods and personal identifiers, and we perform reverse image searches and query breach databases. In short, we pursue practically any data point that might lead to an identity. The methods we use depend on the circumstances.

"The most important element is reporting. However, it makes me angry how unclear many reporting platforms are when it comes to prosecution. In my opinion, the IWF uses vague language that completely sidesteps questions about investigations and prosecutions based on the information it receives in reports. They don't make their purpose very clear. After a lot of Googling, I learned that they simply remove CSAM. That gives the criminals behind it a free pass to create new sites and accounts, which makes no sense to me. People are told to go somewhere else to file reports that apparently don't meet the IWF's criteria. Most people don't even know where to go, and legitimate cases get turned away.

"You can't contact the FBI, because it has completely outsourced criminal CSAM complaints to NCMEC. FBI agents are assigned to work with NCMEC to identify and prosecute these people. Even so, they offer little reassurance to those who submit reports that their reports are actually reviewed. This gives the public the impression that their reports are simply thrown in the trash. Nor is it clear exactly what NCMEC's role is in receiving reports unless you dig deep for the answers.

"I have found that Child Exploitation and Online Protection (CEOP) is very clear about what it does. It is a police unit of the National Crime Agency. They spoke with me, asked for the evidence my team had collected, and have the proper channels to work with other law enforcement agencies, which civilians do not have. I have seen them advise an individual and offer empathy and emotional support. I highly recommend them."

Before delving into the case, it is worth highlighting the group's method of analysis and investigation. No law prohibits OSINT collection techniques. At the same time, the group never encourages any kind of illegal hacking, such as a DDoS attack against a target or the use of illegal means to obtain information.

Moreover, deceptive operations aimed at "catching" and "reporting" a sexual predator are not always easy and, at times, they border on illegality. Above all, for many people, seeing this cruel world up close can be truly traumatizing. Ghost Exodus, for example, explains: "I'm the kind of person who can't even glimpse things like this without being traumatized, which is why I deal exclusively with data analysis."

O: "Building on what you just said, let's talk about how everything is organized within ETA-W1nterSt0rm for Operation Child Safety. You said one person has been assigned to provide visual confirmation, and another was selected to run OSINT on usernames and phone numbers. That shows a careful, well-organized group."

Ghost Exodus: "Yes, it's actually quite simple. We run multiple teams, which helps lighten the workload. One team uses OSINT to de-anonymize users of CSAM websites. Another performs data analysis on the sites themselves, examining records and sifting through source code to extract useful information. A third compiles the data and forwards it to a reporting platform."

While investigating a website hosting child sexual abuse material, ETA-W1nterSt0rm members uncovered a vast network across the Internet that concealed a real nightmare. This all began, as noted earlier, on February 28, 2024, when a member of Anonymous found a link in a public Facebook group that ETA-W1nterSt0rm was investigating.

The first step in working out who was hosting the target was to obtain its WHOIS records. These yielded initial information about the website's hosting provider or owner. Next, VirusTotal was used to passively scan the hosts and map every relationship connected to the target: host information, ASNs, WHOIS data, domain certificates, subdomains, mirrors and, of course, malicious files or code.

"That was when," Ghost Exodus notes, "I discovered a mirror run by the same hosting provider." From then on, the group was working on two sites instead of one. "The domain name," Ghost Exodus explains in his report, also published on CyberNews, "belonged to a Google Domains subscriber registered in Germany, but the hosting server was located in Nevada, USA. The subscriber information was marked as private.

"In this case, the scan generated a large cluster of malicious code. Within one of these clusters, however, was a malicious IoC (Indicator of Compromise) pointing to a Tor onion link on the dark web. It suggested that Hydra subscribers might access the content through a bridge from the dark web to the clearnet. This could easily be achieved with tor2web, an HTTP proxy software designed for exactly this purpose.

"An IoC acts as a red flag, indicating that something suspicious may be happening on a network or endpoint. It can be anything from odd activity, such as external scans, to data breaches. Any digital evidence an attacker leaves behind can surface as an IoC."

On February 29, both sites were reported to the Internet Watch Foundation and the National Center for Missing and Exploited Children.

O: "At this point we need to explain why it is important not to launch cyberattacks against the sites under investigation. I'll also let you tell us what happened on March 2, after you reported the two sites."

Ghost Exodus: "Here is the truth: DDoSing websites under investigation for hosting media that depict child abuse victims is insane, reckless and the stupidest thing I have ever seen. I don't mind saying it. This view isn't just mine; professional hunters who work with the FBI share it too. People who do this should be removed from OpChildSafety volunteer work until they learn that this is not the way. Too often, overly ambitious hacktivists want to appear fast, proactive and powerful enough to produce 'immediate results'. However, attacking sites under investigation effectively halts any further investigative initiative and brings it to a standstill.

"Not only is the website taken offline, but the CSAM operators also get fair warning that they are under investigation. They shut down their operations and move somewhere else where they can keep hosting media depicting molested children for profit. Forgive me for using such vivid and concrete terms. This problem is so persistent, and so few people recognize 'The Charter', because of Anonymous's herd mentality. The people who attack these sites are usually childish, selfish, self-promoting idiots who do not deserve to wear the mask. They are helping the enemy, even if unintentionally. Some have their 'heart in the right place', even though their actions are misguided. Finally, when we lose access to the evidence, so does law enforcement. That gives CSAM operators, and everyone who feeds the monster, a free pass to keep committing crimes against the innocence of children."

After the events of March 2, the data was reconstructed. "More importantly," Ghost Exodus stressed, "after running a reverse image search on the 'model' the operators used as the website's preview image, we learned that the victim in question appears on CSAM sites all across the clearnet. Using the same method, I ran a reverse image search on a screenshot of their user login page. I found that one of the defunct mirrors had been cached by Yandex, which pointed to a single snapshot of the site on Archive.org. Then, by searching for the domain names on Google, we discovered more than a dozen mirrors. Many of them were linked to password-protected illicit content shared on Mega and to other mirrors, including links posted on Facebook."

One of the group's researchers then began scanning and checking Hydra's sites, visually inspecting their source code. This is what they found:

The team then turned to ChatGPT. "I used ChatGPT to better understand the relationship between the different sections of the code," Ghost Exodus said, "and had it look for useful investigative leads, such as payment information and how the content was hosted. It's great for generating summaries of complex information, because it lets you analyze programming languages you may not be familiar with."

But ChatGPT was not the only AI tool used in the investigation. When one of the researchers infiltrated Hydra's WhatsApp group, they gained access to users' phone numbers and profile pictures. Here too AI proved useful: an application was used to reconstruct a photo of a high-profile target that had been cut in half. Unfortunately, a reverse image search on the result found no match.

One of the researchers discovered that Hydra suffers from an interesting server vulnerability. An endpoint allowed the researcher to view the server's GET requests in real time, exposing a log of timestamps, usernames, user IDs, the tier each user had purchased, how many users each had invited to the site, and so on.

This made it possible to dig even deeper into the network and understand its criminal structure.

O: "Even after all these discoveries, this is not the end of the story: more and more mirrors are found every day. How many have been discovered so far, and how many CSAM networks have come to light?"

Ghost Exodus: "One of our data analysts has found about 60 mirrors run by Hydra. We discover more every day."

O: "In your research, you pointed out that Hydra stands on the shoulders of a common denominator: the tech industry. I don't think the industry directly promotes it. Still, criminals thrive on various online platforms and often face no consequences. Is it reasonable to expect more transparency in this area? Is deleting content enough?"

Ghost Exodus: "Simply deleting content without any element of criminal prosecution is pointless. The common denominator in this fight against predators is, irrevocably, the tech industry. Meta is a perfect example, but the video game industry is an even bigger one. There are no actionable safeguards to detect this abuse, even though the same technology is used to censor free speech, flag suspicious news sources, fact-check what you post, and so on. Child safety is not a component of the industry, even though it claims the opposite. The evidence speaks for itself. Take Cloudflare, for example. Its services not only shield cybercriminals but also protect pedophiles on such a vast scale that many heads would roll. Hydra has been using its services for over 2 years. Those services have provided security and protection for dozens of obscure domain names on the clearnet. There is no obfuscation: their material is fully visible to the public.

"Protecting young users was an afterthought from the start. It has never been a priority. We know this because CSAM keeps proliferating on the web and shows no signs of slowing down. That is because the industry has provided infrastructure with no actionable oversight: no gatekeepers, no security checkpoints to stop bad actors from using it and, above all, no usable detection technology, as Facebook shows. If we went after child molesters with the same determination as the U.S. government's war on terror, we wouldn't be doing this interview.

"If I were caught running a multimillion-dollar Ponzi scheme on Cloudflare, or discussing terrorist plots, they wouldn't just delete my account. They would work with law enforcement to make sure I was brought to justice. Yet when the victims are children, even the IWF simply takes the sites down and calls it a day. It will take legislation to put the tech industry's back against the wall and make this an absolute priority."

O: "After some research, ETA-W1nterSt0rm learned that the law enforcement unit Child Exploitation and Online Protection (CEOP) will work with the public to investigate and arrest the people behind CSAM crimes. What other progress do you hope to see in this area?"

Ghost Exodus: "The only progress I hope to see is the Hydra CSAM operators put in prison, along with their many users, and the children rescued. Rescuing the children is the ultimate goal."

O: "Why is it important for ETA-W1nterSt0rm to maintain a good reputation, and what does teaching ethical, responsible hacking mean to you?"

Ghost Exodus: "Law enforcement does not collaborate with cyber vigilantes, especially Anonymous. That has more to do with how Anonymous behaves, since it does not have a positive reputation. Organizations won't collaborate with cyber vigilantes either. Worse still, Anonymous attacks itself relentlessly, so we try to stay above reproach in order to keep working without obstacles. As for hacking, we don't use hacking techniques. We use publicly available resources."
