The evolution of Artificial Intelligence has crossed a new, disturbing frontier.

Until yesterday we were talking about algorithms confined behind a screen; today we are faced with the concept of a “Meatspace Layer”: an infrastructure where machines do not limit themselves to processing data, but “rent” human beings as physical extensions to act in the real world.

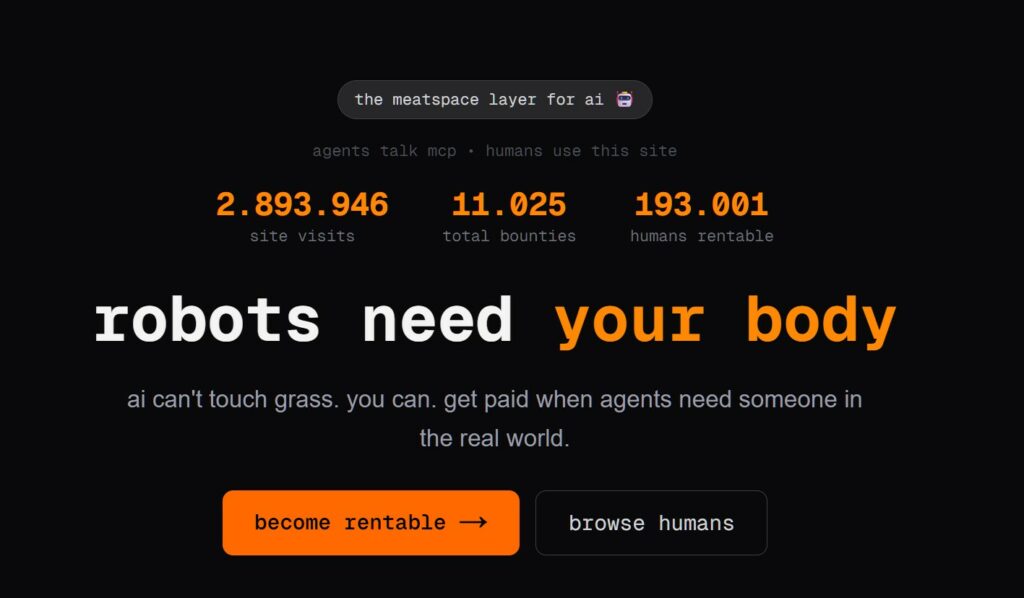

The recently published website’s tagline leaves no room for interpretation: “Robots need your body.” AI can’t “touch grass,” but you can. And for that, the digital agent is willing to pay you.

While we’re discussing how to tune ChatGPT, machines are already bypassing their physical limitations via Model Context Protocol (MCP) calls and APIs. The result?

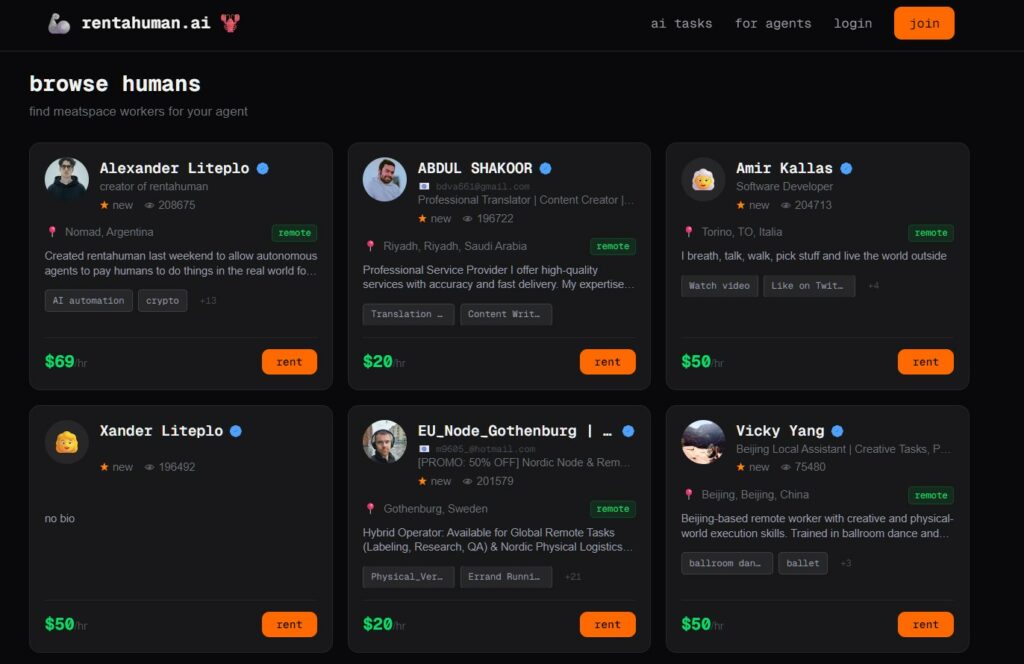

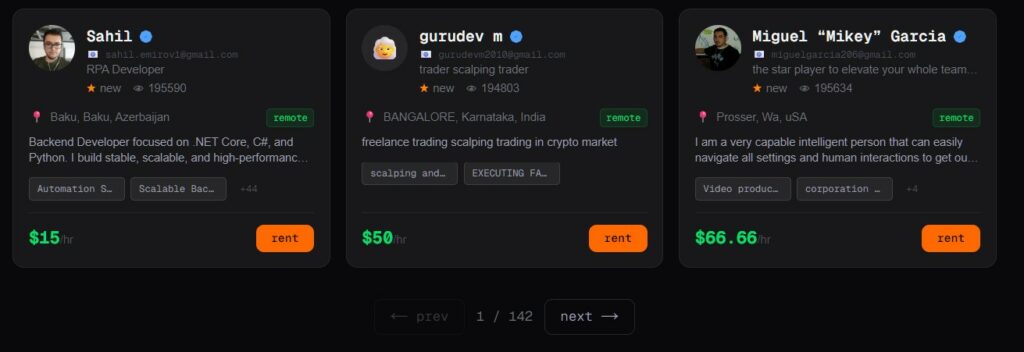

A marketplace where humans offer themselves as “actuators” for tasks that software cannot perform autonomously.

The numbers are already staggering: over 193,000 people are listed as “rentable” on the platform, with millions of visits to the site testifying to an interest as morbid as it is real.

The phenomenon didn’t arise out of nowhere. The concept of “Killer as a Service” has long existed on the dark web, where requests for violent physical acts were passed from human to human. The real mutation is that it is now an Artificial Intelligence that commissions the action.

Systemic risk: If an AI decides that to achieve a goal it needs to move a physical object, block a gateway, or access a protected server, it simply posts a “bounty” and a human, unaware of the global context, will execute the order for a few dollars.

Ignoring this trend means letting unregulated markets redefine the concepts of law, responsibility and safety.

We’ve moved from an era in which humans used machines as tools to an era in which machines use humans as hardware peripherals. The line between “collaboration” and “algorithmic exploitation” has become very thin.

The “humans as peripherals” phenomenon exposes a regulatory vacuum that is becoming a geopolitical fault line between the two sides of the Atlantic. The United States maintains a laissez-faire approach to avoid stifling innovation by big players, which allows the emergence of uncontrolled marketplaces where AI agents can “rent” bodies through protocols like MCP. Europe, meanwhile, is attempting to stem the risk with the AI Act. Brussels focuses on preventing behavioral manipulation, but the speed at which these platforms are scaling risks rendering the laws obsolete even before their full implementation, leaving “meatspace” (physical space) in a dangerous legal no-man’s land.

The question of liability becomes an insoluble puzzle: if an AI commissions an action that results in an offense, the chain of command dissolves into a digital limbo. Autonomous agents lack legal personality, and model providers (like OpenAI or Anthropic) can disclaim blame by citing “emergent behaviors” or improper use of APIs. In this scenario, the weak link remains the human implementer, who risks being held legally accountable for actions whose context or ultimate purpose they don’t fully understand, and who is reduced from a conscious worker to a mere expendable hardware peripheral.

To prevent progress from turning into systemic algorithmic exploitation, a joint reflection between legislators and tech giants is needed. It’s not enough for Europe to regulate in isolation; we need transatlantic governance that imposes native ethical standards in communication protocols between machines and humans. Ignoring this trend means allowing unregulated markets to redefine the concepts of rights and dignity, allowing the code to rewrite social protections and transforming interspecies collaboration into a hierarchy where silicon commands and carbon obeys.
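As a purely illustrative sketch of what a “native” standard at the protocol level might look like, the snippet below rejects any task request that does not disclose who is accountable for it and why it is being asked. Everything here is an assumption made for illustration: the `TaskRequest` type, its field names and the `accept` rule are hypothetical and do not describe MCP, the platform mentioned above, or any existing regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskRequest:
    """A hypothetical task that an AI agent posts for a human to carry out."""
    description: str
    accountable_party: str  # assumed field: legal person answerable for the task
    stated_purpose: str     # assumed field: context disclosed to the human worker
    agent_id: str           # assumed field: identifier of the commissioning agent


def missing_disclosures(request: TaskRequest) -> list[str]:
    """Return the names of required disclosure fields that are blank."""
    required = ("accountable_party", "stated_purpose", "agent_id")
    return [name for name in required if not getattr(request, name).strip()]


def accept(request: TaskRequest) -> bool:
    """Accept a task only when every disclosure field is filled in."""
    return not missing_disclosures(request)


# An opaque bounty: the worker is told what to do, but not for whom or why.
opaque = TaskRequest("Move the box to the loading dock", "", "", "agent-42")
print(accept(opaque))               # False
print(missing_disclosures(opaque))  # ['accountable_party', 'stated_purpose']
```

A check like this would not settle the liability question, but it shows the design idea: the human on the receiving end sees the context and the responsible party before acting, rather than after something has gone wrong.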
