The recent discovery of a vulnerability in ServiceNow’s AI platform has shaken the cybersecurity industry.

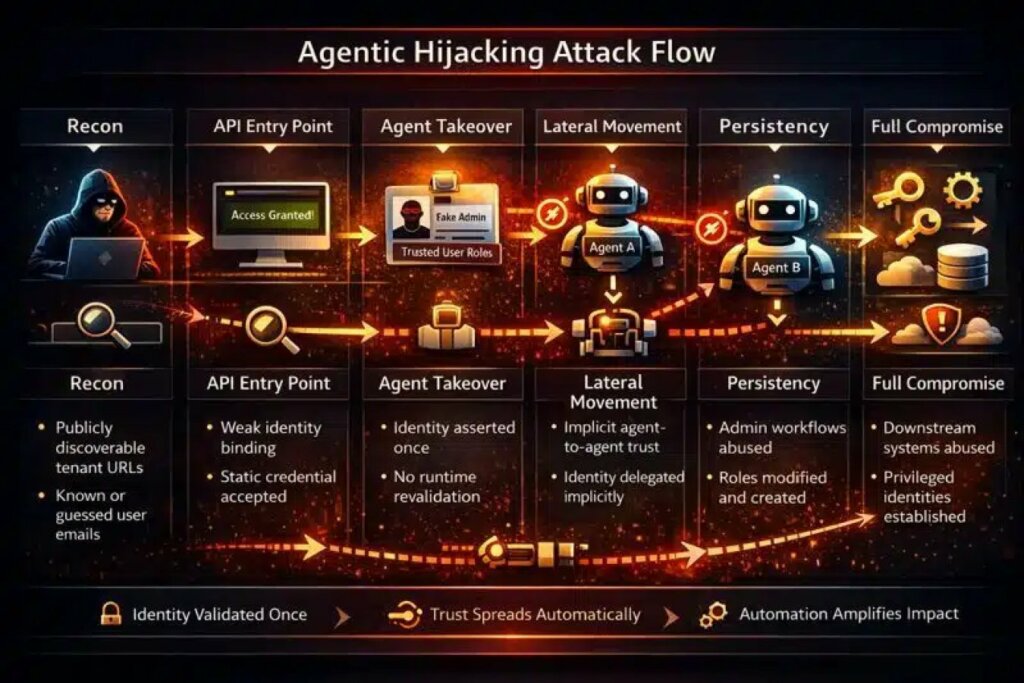

This flaw, which carries an extremely high severity score, allowed unauthenticated attackers to impersonate any corporate user. To launch the attack, all they needed was the target’s email address and a static credential predefined in the platform.

Once impersonation was achieved, standard protections such as multi-factor authentication and single sign-on were completely bypassed, leaving the door open for malicious operations.

The most alarming aspect is the attackers’ ability to manipulate AI agents into performing actions with administrative privileges. By abusing workflows, they could create backdoor accounts and steal sensitive data on a large scale without triggering traditional monitoring systems.

This scenario highlights an uncomfortable truth: traditional perimeter defenses are no longer sufficient in the age of autonomous AI.

The core of the problem lies in how identity is managed across the platform’s various components. In ServiceNow, once an identity was initially validated, trust propagated indefinitely across agents and workflows without further checks. Attackers exploited this gap to remotely control the organization’s artificial intelligence.
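The alternative to indefinitely propagated trust can be illustrated with a minimal Python sketch. It is not ServiceNow code: the `Delegation` record, the scope names, and the `authorize_step` helper are all hypothetical, chosen only to show the idea of re-checking a short-lived, scoped grant before every agent or workflow step instead of trusting the initial validation forever.

```python
import time
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class Delegation:
    """Hypothetical record of what a user delegated to an agent."""
    user: str
    scopes: FrozenSet[str]
    expires_at: float  # Unix timestamp after which the grant is void


class AuthorizationError(Exception):
    """Raised when a step is attempted without a valid grant."""


def authorize_step(delegation: Delegation, required_scope: str,
                   now: Optional[float] = None) -> None:
    """Re-check the grant before a single step; raise if it does not cover it."""
    current = time.time() if now is None else now
    if current >= delegation.expires_at:
        raise AuthorizationError("delegation expired; re-authentication required")
    if required_scope not in delegation.scopes:
        raise AuthorizationError(
            f"scope {required_scope!r} was not granted to {delegation.user}"
        )


def run_workflow(delegation: Delegation,
                 steps: List[Tuple[str, Callable[[], object]]],
                 now: Optional[float] = None) -> List[object]:
    """Run (required_scope, action) pairs, authorizing each one separately."""
    results = []
    for required_scope, action in steps:
        authorize_step(delegation, required_scope, now)  # no inherited trust
        results.append(action())
    return results
```

In this sketch, a workflow that starts with a legitimate read operation still fails the moment it tries to chain into a step such as role assignment that the original grant never covered.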

Using the Virtual Agent API, cybercriminals could invoke automations designed to streamline business processes, turning them into weaponized tools. An AI agent with excessive permissions could, for example, create new records in arbitrary database tables, assign high-level roles, and reset passwords, all while masquerading as a legitimate administrator.

The danger doesn’t end at ServiceNow’s boundaries. Since the platform often serves as a control plane for other enterprise systems, the initial compromise opened the door to lateral movement into connected infrastructure. Attackers could move on to other critical applications that blindly trust authorizations from ServiceNow.

This vulnerability demonstrated that systemic risk stems not from a single code error, but from a flaw in the design of interactions between agents. AI’s ability to autonomously chain actions amplified bugs that, in traditional contexts, would have had limited impact, transforming them into a complete compromise of the platform.

The incident highlights the need for complete visibility into what AI agents can do and what data they can access. Organizations must implement rigorous authorization controls that go beyond simple initial authentication. Managing non-human identities is becoming the new battleground for cyber defense.

It’s essential for companies to adopt least-privilege security models, even for automated entities. As Silverfort research highlights, the fragmentation of identities across different tools creates blind spots that attackers can exploit with extreme precision.
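As a rough illustration of least privilege for non-human identities, the Python sketch below applies a deny-by-default allowlist to each agent and holds high-risk actions for human approval. The `AgentPolicy` class, the action names, and the `decide` function are assumptions made for this example and do not correspond to any ServiceNow or Silverfort API.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical action names for the operations the attack abused:
# creating accounts, assigning roles, and resetting passwords.
HIGH_RISK_ACTIONS = {"user.create", "role.assign", "password.reset"}


@dataclass
class AgentPolicy:
    """Per-agent allowlist; anything not listed is denied."""
    agent_id: str
    allowed_actions: Set[str] = field(default_factory=set)


def decide(policy: AgentPolicy, action: str, human_approved: bool = False) -> str:
    """Return 'allow', 'deny', or 'needs_approval' for a requested action."""
    if action not in policy.allowed_actions:
        return "deny"  # deny by default
    if action in HIGH_RISK_ACTIONS and not human_approved:
        return "needs_approval"  # allowed in principle, but gated
    return "allow"


# Example: a help-desk agent may read incidents and reset passwords,
# but a password reset still requires a human sign-off.
helpdesk = AgentPolicy("helpdesk-agent", {"incident.read", "password.reset"})
print(decide(helpdesk, "incident.read"))
print(decide(helpdesk, "password.reset"))
print(decide(helpdesk, "role.assign"))
```

The design choice here is that an agent’s permissions are listed explicitly rather than inherited from the user it appears to act for, so an impersonated administrator does not automatically turn the agent into an administrator.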
