Alessio Stefan : 15 May 2025 20:42

In recent years, political attention has expanded beyond the national borders of individual states. The COVID-19 pandemic, now behind us, and the (re)emergence of conflicts in several parts of the world have broadened public debate by bringing statements and decisions made at the supranational level to the fore.

The European Union has received extensive media coverage in recent years: think of the AI Act and the whole debate it sparked on artificial intelligence, or of the GDPR in the area of data protection. Beyond measures aimed at the digital industry, the ReArm Europe initiative [1] has been widely discussed by supporters and opponents of the project alike.

In itself, this scenario can only be seen as positive: discussions are placed on a multi-jurisdictional footing for decisions that, if addressed independently by individual states, would be complex and hard to align along a common path.

This article deals mainly with two positions taken by the European Commission (after an initial digression on Italy) and discusses their merits. Throughout, we adhere (as far as possible) to the principle of charity and do not assume any malice on the part of the institutions mentioned.

Each measure is discussed as a risk (and, if implemented, a threat) to the right to privacy and (pseudo)anonymity in the digital sphere, together with how that right has been weighed against the safety or protection of specific groups.

Recall that privacy is recognized as a fundamental right by Article 12 of the Universal Declaration of Human Rights [2] and Article 17 of the International Covenant on Civil and Political Rights (1966) [3], and that countries which make democracy their political cornerstone (Italy and the EU included) are expected to protect it.

Each individual is free to decide how much value to place on that right, but when an institution unilaterally imposes measures that jeopardize it, the issue at the very least deserves attention.

AGCOM issued a press release on April 18, 2025 on its guidelines for verifying the age of users of “video sharing platforms and websites” [4], with which providers will have to comply within six months of the publication of the resolution. According to the published statements, the age verification system will be based on two “logically distinct” steps involving three main actors: the user, identity providers and an independent third party.

The user must first identify themselves to the third-party entity, which validates their date of birth (i.e., establishes whether the user is a minor). Then, to start a session on the service, the provider must request validation from that third-party entity.

The communiqué names none of these “certified independent third parties”, nor does it describe how the verification process works; it lists only the criteria that will be considered, including:

AGCOM describes this process as a “double-anonymity” mechanism, a description we consider very misleading. If the third-party entity must hold my basic identity, anonymity is lost, even though the service provider learns nothing beyond the user’s age. More precisely, the validation process is the opposite of “double anonymity”: it adds new artifacts that can be used to track users. This must be explained to users (whom AGCOM is committed to protecting) clearly and in no uncertain terms.
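To make the objection concrete, here is a minimal Python sketch of the simplest design consistent with the communiqué’s description, in which the third party answers each validation request itself. AGCOM has not published a protocol, so every name here (`HypotheticalVerifier`, `enroll`, `validate`, `HypotheticalProvider`) is hypothetical, and a real scheme may be built differently. What the sketch shows is that the provider only learns a yes/no answer, while the verifier, which already holds the user’s identity, sees every site that asks about that user.

```python
import secrets
from dataclasses import dataclass, field
from datetime import date


@dataclass
class HypotheticalVerifier:
    """Hypothetical 'certified independent third party' (not AGCOM's actual design)."""

    today: date
    # token -> (identity, is_adult): the verifier must know who the user is
    tokens: dict = field(default_factory=dict)
    # (identity, provider) pairs for every validation answered: the tracking artifact
    audit_log: list = field(default_factory=list)

    def enroll(self, identity: str, birth_date: date) -> str:
        """Step 1: the user identifies themselves and receives an opaque token."""
        had_birthday = (self.today.month, self.today.day) >= (birth_date.month, birth_date.day)
        age = self.today.year - birth_date.year - (0 if had_birthday else 1)
        token = secrets.token_hex(16)
        self.tokens[token] = (identity, age >= 18)
        return token

    def validate(self, token: str, provider: str) -> bool:
        """Step 2: a provider asks whether the token's holder is an adult."""
        identity, is_adult = self.tokens[token]
        # Assumption: the verifier sees which provider is asking, linking identity to site
        self.audit_log.append((identity, provider))
        return is_adult


@dataclass
class HypotheticalProvider:
    """Hypothetical video-sharing site that only ever receives a yes/no answer."""

    name: str
    verifier: HypotheticalVerifier

    def open_session(self, token: str) -> bool:
        return self.verifier.validate(token, self.name)


if __name__ == "__main__":
    verifier = HypotheticalVerifier(today=date(2025, 5, 15))
    token = verifier.enroll("Mario Rossi", date(1990, 1, 1))
    site = HypotheticalProvider("adult-site.example", verifier)
    print(site.open_session(token))  # True: the site learns only "adult"
    print(verifier.audit_log)  # [('Mario Rossi', 'adult-site.example')]
```

The provider never sees the name, which is the “anonymity” the communiqué refers to; the `audit_log` on the verifier side is the linkage that makes the “double” part questionable.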

To be nitpicky, no definition is given of what counts as a risk or danger to minors (the communiqué limits itself to the equation “viewing pornographic content = harm”). One could easily argue that a model posting lingerie photos with a link to her OnlyFans profile in the description is more harmful to minors than a video of sex acts found on PornHub.

Despite the relatively short deadline by which service providers must comply in order to operate within Italian borders, it is still unclear how users will be identified (e.g., via SPID or a photo ID card). This lack of clarity is quite perplexing given that a public consultation on the matter began in 2024 [5]; presumably it is a gap in the current communiqué, and more details will come with the publication of the resolution.

These guidelines have a specific stated purpose, namely “to ensure effective protection of minors from the dangers of the web”. Not coincidentally, they extend Law 159 of November 13, 2023 (the “Caivano Decree”) to realities such as betting and gambling sites, making the providers themselves responsible for verifying the age of their users.

No one wants to (or should) claim that viewing pornography carries no risk (with the appropriate degree of severity), particularly for underage users. Several studies address the subject, such as Problematic Pornography Use: Legal and Health Policy Considerations [6], which analyzes how viewing online pornography is fertile ground for PPU (Problematic Pornography Use) and argues that what matters is not how much material is viewed but at what stage of brain development and in what manner. The study Pornography Consumption and Cognitive-Affective Distress examined the distortions caused by excessive use of pornographic material, which lead to relational problems and can contribute to later mental dysfunction.

It is precisely because we are well aware of the risk that we can say these guidelines are not at all effective against the problem they are meant to solve. How easily this kind of measure can be circumvented is by now obvious, and it should not be ignored: to be considered “effective”, a measure must at the very least be robust against the common tools of circumvention (a VPN exiting outside Italy being the most obvious). Otherwise, the result is a solution out of step with the times that only wastes public institutions’ time and resources.

Setting this aside, let’s assume AGCOM’s plan achieves its intent: the result would not be a lower share of minors consuming adult material, but rather their displacement from the “lawful” channels that offer it. Platforms such as PornHub, XVIDEOS, YouPorn and the like were put under the spotlight as soon as age verification was announced. Remember that such sites have controls and codes of conduct for published content, which serve to sanction and report potentially illicit uploads.

These platforms (now with millions of users a year) try to limit revenge porn and non-consensual disclosure by maintaining a sex-positive, primarily recreational environment that, despite the huge variety of content, does not offer fertile ground for violence, abuse or similar content.

There are far more dangerous environments (which should be given priority) that have no interest in complying with any regulation, or that circumvent it by exploiting platforms that are neutral in themselves (e.g., Telegram). We are talking about channels and groups that can easily be found on Telegram, alternative sites with fewer restrictions on uploaded content, and any other place that encourages users to exchange material. These environments must be countered not only with rules and regulations but with real field operations, carried out continuously and backed by adequate resources. Forcing age verification in the way AGCOM proposes risks a large shift of minors toward platforms with less control over content. In addition, “extreme” or less “extreme” material can easily be found on Google Images [7]: would the solution be to require age verification for certain search-engine queries? X/Twitter quietly allows nude photos and videos; should this platform also be subject to such a measure?

In an ideal world, no minor (especially under 14) would come into contact with pornographic material without proper preparation; realistically, however, this scenario must be accepted as a price of easy Internet access for everyone. Since the risk cannot be reduced to zero (partly because of the sexualization present on social media, which people aged 13 to 17 can legally access without restriction), efforts must aim to limit access to the harmful environments that can directly influence the behavior of this group of users.

Without resorting to half-measures, we must accept that a minor who wants to access pornographic content will do so regardless of the barriers imposed. Likewise, pornography offered as a recreational experience can certainly cause various problems for the individual, but the harms are far smaller than those of other channels for sharing material.

The risk of a growing audience in environments where revenge porn is normalized, user-to-user exchange of material is encouraged, and such content is not controlled at all is considerably greater than that of casual use of traditional porn sites, and this measure will only increase traffic to very opaque areas. Not only is the measure ineffective as AGCOM has planned it, it may even make the situation worse by reducing monitoring, and therefore control, of the phenomenon.

On this basis we can move on to the next point: proportionality. To what extent can we restrict everyone’s freedoms to protect a subset of online users?

Merely asking this question can make a person appear insensitive, when in fact it reflects pragmatism and cost-benefit balancing. The problem must be analyzed dispassionately, without lapsing into a simple “what about the children?” that acts as “plot armor” in place of a real argument.

First, one can easily find several political attempts to extend similar measures beyond pornographic and gambling sites (we discussed this in a previous article [8]), which strains the principle of charity with which we opened. The stated reasons vary widely but always fall under protecting minors or countering the abuse of anonymity by people engaging in hate speech on social networks.

Any state that proposes this type of regulation sets a precedent that threatens free use of the Internet: it extends the ability to profile and monitor users to government institutions, de facto placing them within the threat model of anyone who values their right to privacy and/or anonymity. A European country should protect this right, not be its enemy. Taking away even part of it will not solve hate speech or racism, but it will reduce some individuals’ free access to parts of the network.

Returning to pornography, the argument is much the same: there should be nothing suspicious about someone not wanting a third-party entity to know how often they visit adult sites. If an individual wants to visit certain sites without any entity or person knowing about it, that wish must be respected.

To be blunt, respect for this wish cannot be sacrificed to make up for negligence by those who should actually be protecting minors. Let us not forget that direct responsibility for protecting minors from unsuitable content (e.g., pornography and gambling) lies with their parents or guardians. If the problem really is minors’ repeated access to such content, we must ask how their uncontrolled use of the Internet is possible in the first place.

Except perhaps for older people (over 60), everyone is by now aware of how easily any video or image can be found with a simple search engine. At the same time, virtually every device can now be configured with parental controls that block certain content (or at least notify the parent when inappropriate content is viewed on the child’s device).
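Many parental-control tools (router, DNS or operating-system filters) work at least in part by matching requested domains against a blocklist. The Python sketch below illustrates only that matching step, assuming a simple blocklist of domain names; the function names and the `.example` domains are hypothetical, and real products add categories, schedules and reporting on top.

```python
def normalize(host: str) -> str:
    """Lower-case a hostname and drop a trailing root dot ("Example.COM." -> "example.com")."""
    return host.strip().rstrip(".").lower()


def is_blocked(host: str, blocklist: set[str]) -> bool:
    """Return True if the host or any of its parent domains is on the blocklist.

    Matching whole labels avoids the classic mistake of a plain endswith() check,
    which would also block an unrelated "notadult-site.example".
    """
    entries = {normalize(entry) for entry in blocklist}
    labels = normalize(host).split(".")
    # Check "www.adult-site.example", then "adult-site.example", then "example"
    return any(".".join(labels[i:]) in entries for i in range(len(labels)))


if __name__ == "__main__":
    blocklist = {"adult-site.example"}  # hypothetical entry
    for host in ["www.adult-site.example", "WWW.Adult-Site.Example.", "notadult-site.example"]:
        print(host, is_blocked(host, blocklist))
```

The first two hosts are blocked and the third is not; the point is that this kind of filtering runs on the child’s own device or home network and requires no identity document from anyone.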

Given this, why does it seem outrageous to place responsibility on those to whom it actually belongs? And why should inadequate preparation (or total or partial disinterest) on the part of guardians be remedied by a measure such as the one AGCOM proposes?

There is no proportionality in this. A state should not take over a guardian’s responsibilities by restricting everyone’s freedoms indiscriminately, especially where privacy and anonymity online are concerned. Merely requiring an ID to access an online resource is a limitation (for those who do not have one, do not want to hand it over, or are denied access under the rules imposed by AGCOM), and as such it should be weighed very carefully and, above all, properly justified before being implemented.

As mentioned at the start of this section, we are aware of the risks of pornography use by minors, and precisely for that reason we ask that truly effective solutions be found by studying analytically why and how such content is so freely accessible to the young. For the same reason, parents should be given adequate responsibility and, where needed, shown in practical terms how to provide the protection this group of users requires.

Is this a 100% “effective” solution? Absolutely not. Is it better than measures that restrict the rights of others? We leave that answer to the reader.

The way these regulations are communicated is very puzzling. They are sold as the definitive solution to problems that laws alone can address only superficially at best. In parallel, there is an attempt to (re)legitimize the argument “if you have nothing to hide, you have nothing to worry about”.

Returning to the technical side, several aspects of the AGCOM notice should at the very least be clarified if an age verification mechanism like the one proposed is indeed to be implemented.

Before leaving this “hot” section, we want to make clear that we are not condemning the parents or guardians of minors who have come into contact with pornographic material online, far from it. That role comes with challenges in a digitized world that should not be underestimated, but at the same time it should not be delegated to others. Unfortunately, falling back on the usual commonplaces, without a proactive approach that at least mitigates or hinders specific behaviors on the Internet (both suffered and enacted, e.g., viewing pornographic material, hate speech, bullying), turns out to be the easiest path but not the most effective one. It is understandable that part of the online public finds such a measure plausible, but we ask them to consider what repercussions it might have on a broader level.

Since we are not experts on this subject, we are open to hosting (in any form) specialists who can propose different measures and, above all, give the problem its proper magnitude without stirring unwarranted panic or limiting the fundamental rights on which our social system is based.

After this brief zoom on the Bel Paese, we can move to the macro level: what is happening in the European Union? As mentioned earlier, the GDPR and the AI Act have been widely discussed as attempts to regulate various aspects of the digital sphere in order to protect the citizens of this huge community (and economic market). As noted in the introduction, much of the attention of citizens of the 27 EU member states has gone to the ReArm Europe (or Readiness 2030) plan, which aims to increase the Union’s military spending, motivated mainly by the Russia-Ukraine war, now more than three years old.

Beyond external threats, the EU is also developing several measures for internal security against criminal phenomena it considers relevant.

After several requests and parliamentary debates, the European Commission announced an ad hoc roadmap to improve the protection against, mitigation of and response to internal threats at several levels. The project, named “ProtectEU – the European Internal Security Strategy” [10], was first presented publicly in a document published on April 1, 2025.

After reading the document, the accompanying FAQ [11] and the statements on the subject by Henna Virkkunen (Executive Vice-President for Tech Sovereignty, Security and Democracy) [12], we have several concerns to share with our readers about encryption and law enforcement access to encrypted information.

Let’s start with what specifically puzzled us. The document [10] contains several statements about its goals in this area, including:

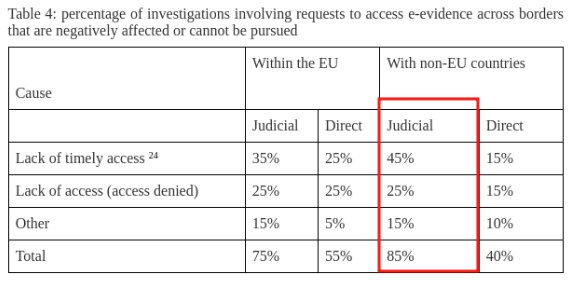

The message is quite clear: the EU de facto wants to implement a backdoor into the various technologies that offer encrypted channels to their users, while promising to keep citizens’ security intact. Let’s begin with point 3 in the list above, the 85% figure also cited by Henna Virkkunen herself. How that percentage was arrived at is not well explained, but the source cited in the document points to a further document (2019) titled “Recommendation for a COUNCIL DECISION authorizing the opening of negotiations in view of an agreement between the European Union and the United States of America on cross-border access to electronic evidence for judicial cooperation in criminal matters” [13], where the figure is simply stated, with some additional detail:

More than half of all criminal investigations today require access to cross-border electronic evidence. Electronic evidence is needed in around 85% of criminal investigations, and in two-thirds of these investigations there is a need to obtain evidence from online service providers based in another jurisdiction.
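The quotation’s “more than half” is the product of its two figures: electronic evidence is needed in about 85% of investigations, and two-thirds of those need evidence from a provider in another jurisdiction. A quick check with exact fractions (the variable names are ours) also makes plain that the headline 85% describes the need for electronic evidence in general, not access to encrypted content:

```python
from fractions import Fraction

needs_e_evidence = Fraction(85, 100)  # share of all investigations needing electronic evidence
cross_border_given_e = Fraction(2, 3)  # share of those needing a provider in another jurisdiction

cross_border_overall = needs_e_evidence * cross_border_given_e
print(cross_border_overall, f"{float(cross_border_overall):.1%}")  # 17/30 56.7%
```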

A brief search turns up a “Working Document” from 2018, again on electronic evidence, in which the figure is based on requests for access addressed to non-EU states. This makes the statement “[…] 85% of investigations require access to digital evidence” very misleading, and not very “honest” coming from an institution like the European Commission. More specifically, it concerns judicial requests, of which 25% were actually refused and 45% were not confirmed in a timely manner.

This is not mere nitpicking but a request to present information correctly, so that data is not “stretched” to justify any measure whatsoever. The same document does report that 85% of investigations involve (relevant) digital evidence, but the access requests concern only cross-border investigations, in 65% of which a request for data access is needed.

The statements by Henna Virkkunen and by whoever drafted the ProtectEU FAQ are therefore hard to explain.

Moreover, these numbers are inflated by three (then) EU member states that account for 75% of all requests: the UK, France and Germany. In addition, 70% of all requests were sent to Google and Meta (Facebook at the time).

The cited document includes per-state tables that give an idea of the “raw” numbers; their only flaw is that they do not distinguish requests within and outside EU borders or individual states. One would also need to examine how the requests were made and whether there was adequate evidence to justify access to the data, but even assuming every request was fully justified, the claims remain misleading.

Beyond the statements and percentages, let’s reason about the core of the issue: law enforcement access to encrypted data. Whether communications, data backups or metadata (the latter widely underestimated: not everyone grasps the meaning of “We kill based on metadata” [14]), the EU plans to grant access to the data in unencrypted form, while taking legislative and security aspects into account.
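To see why metadata deserves more attention, consider what an observer can infer from message envelopes alone, without ever decrypting a payload. The Python sketch below is a toy illustration: the `Envelope` record, the contact names, the night-time window and the log are all invented for the example and do not model any specific messaging protocol.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    """Metadata visible to an observer; the encrypted body is deliberately absent."""

    sender: str
    recipient: str
    hour: int  # hour of day (0-23) the message was sent


def contact_profile(log: list[Envelope], person: str) -> Counter:
    """Count how often `person` exchanges messages with each contact."""
    counts: Counter = Counter()
    for env in log:
        if env.sender == person:
            counts[env.recipient] += 1
        elif env.recipient == person:
            counts[env.sender] += 1
    return counts


def night_contacts(log: list[Envelope], person: str) -> set[str]:
    """Contacts `person` messages between midnight and 5 a.m. (an arbitrary window)."""
    return {env.recipient for env in log if env.sender == person and env.hour < 5}


if __name__ == "__main__":
    log = [
        Envelope("alice", "oncology-clinic", 9),
        Envelope("oncology-clinic", "alice", 10),
        Envelope("alice", "oncology-clinic", 16),
        Envelope("alice", "bob", 2),
        Envelope("carol", "bob", 12),
    ]
    print(contact_profile(log, "alice").most_common())
    print(night_contacts(log, "alice"))
```

No message content is ever read, yet the output already suggests a health condition and a close late-night relationship.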

The arguments against this measure rest on two specific aspects:

The EU needs to engage with Europe’s cybersecurity community to fully understand why this measure is a bad idea. Encryption is non-negotiable: 39 organizations and 43 experts have published an open letter to the European Commission on the subject [16], and we hope it will be properly heeded if people’s security truly matters.

Even granting the principle of charity here, governments change, and with them the use made of tools already in place. Just as Russia has changed [17] and the U.S. is changing [18][19], Europe may change too, and in such scenarios we cannot allow any government or political body to have the ability to break citizens’ encryption. We must be very wary of bad intentions, but even more so of measures like these presented with the best of intentions.

The most common (and legitimate) question will be: what is the alternative for countering those who commit crimes with the help of cryptography?

To answer within the limits of our knowledge: there are no silver bullets (no, not even a government backdoor can stop many of the abuses perpetrated online). This implicitly requires combined efforts across different specializations and strengthening law enforcement in several respects (fortunately, ProtectEU does provide for strengthening Europol).

The above proposals (neither the only ones nor necessarily the best) require resources and training for all the actors involved, and one could argue that a “legal” backdoor is cheaper and “easier” to use. There is no way around this; the only counterargument is that security, privacy and freedom have a cost that must be borne (without shortcuts) by the institutions tasked with protecting all three.

Those familiar with EU policy on cryptography will certainly remember the bill dubbed “Chat Control” [23], which would not only have granted access to encrypted communications but also allowed them to be scanned. The main motivation presented to the Commission was protecting minors online by improving the detection of child sexual abuse material (CSAM). Many privacy organizations strongly opposed the proposal [24][25] (as did, to be fair, a significant number of members of the European Parliament [26]); in the end it did not obtain a majority and came to nothing.

This part of ProtectEU is essentially the same proposal in a different guise, shifting the focus to crime in general rather than preventing the spread of CSAM. This strains the principle of charity with which we introduced the article: the counterarguments to Chat Control differ little from those to ProtectEU, so it is hard to understand how a high-level institution can make the same mistake twice.

The European Commission keeps trying to gain access to encrypted data despite the debates on the subject; it would not be surprising to see yet another attempt to break encryption within EU territory in the future.

Let’s stay in the EU, but leave end-to-end (E2E) encryption aside and bring cryptocurrencies into the discussion, particularly those strongly oriented toward anonymity, such as Zcash and, the best known, Monero. The measures in question originate from Regulation (EU) 2024/1624 [26].

The EU has established that from 1 July 2027 [27] an Anti-Money Laundering Regulation (AMLR) will apply at European level; among its focal points are:

Leaving the other points aside, we will focus here on point (3). The problem being addressed is that of illicit markets (e.g., drugs, extortion, sale of illicit goods) that rely on these cryptocurrencies to hide transactions and hamper investigations. Not only will the purchase of these cryptocurrencies be discouraged, but so will mixers and other anonymity tools that fall under DeFi (Decentralized Finance).

It is important to stress that private custody of currencies such as Monero will not be made illegal, but a paradox arises: to perform the transactions described above, one must disclose one’s identity, which defeats the purpose of using this cryptocurrency. In short, the regulation’s underlying aim is to make every single blockchain transaction within EU borders as traceable as possible.

We apologize to readers for not going into the technical mechanisms that reduce the traceability of Monero, Dash or Zcash transactions. To keep the article focused, we ask those unfamiliar with them to concentrate on the fact itself.

One of the most visionary papers in computer security is Adam Young and Moti Yung’s “Cryptovirology – Extortion-Based Security Threats and Countermeasures” (1996) [28], which analyzed how cryptography could cause damage as well as provide anonymity and/or privacy. The paper contains several predictions, such as extortion through cryptography (ransomware) and the use of anonymous electronic money to monetize it (cryptocurrencies as we know them did not yet exist).

Young and Yung’s concept has become a tangible reality: today ransomware and dark markets are real phenomena marked by strong persistence, driven by the large sums circulating within them. “Persistence” must be the pivot of the discussion: the criminal world emerges under more or less favorable conditions, but it also acts with foresight and preparation.

Since both ransomware and dark markets have been cited several times (by politicians and public opinion alike) as factors that make these regulations necessary, we can turn our attention to them here before moving on to the impact this kind of regulation may have on users.

Cryptocurrencies are a payment option, not the cause of the crimes involved, and merely imagining that “cutting” (some of) these options solves the problem is a symptom of dangerously naïve and simplistic thinking. In the case of ransomware, a proactive approach to IT security (both at the macro and the local level) is the real solution. In the dark-market sector, strengthening law enforcement, expanding collaboration between states, continuous training and developing tracking tools are the keys to countering the phenomenon.

Do we want to fight such crimes? Then let’s focus on the causes and not give threat actors the chance to turn the hypothesis of “dark stablecoins” [29] into reality, which would put them further out of reach while enhancing their capabilities. Once again, good intentions can lead to irreversible and self-sabotaging consequences.

The study “Cryptocurrencies and drugs: Analysis of cryptocurrency use on darknet markets in the EU and neighbouring countries” (2023) [30] by the EUDA (European Union Drugs Agency) showed that, among the countries examined, cryptocurrency bans have in no way stopped dark-market activity:

Eight of the 54 countries (~15 %) in the sample have outright bans on cryptocurrencies, yet engagement with DNM continues in these locations, particularly among those in the EU’s Southern Neighbourhood.
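The “~15 %” in the quotation is simply 8 out of 54 countries rounded to the nearest percent, as a one-line check confirms (variable names are ours):

```python
banned, sample = 8, 54  # countries with outright bans / countries in the EUDA sample
share = banned / sample
print(f"{share:.1%}")  # 14.8%
```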

Alternative payment methods already exist and are used in these environments for money laundering, both by RaaS (Ransomware-as-a-Service) groups and in the dark-market world. Moreover, an effective ban on any available cryptocurrency is extremely difficult: peer-to-peer exchanges exist and remain in use, which makes KYC (Know Your Customer) processes hard to enforce. In short, even if it were the right path, it is too late to ban even part of this technology.

Alternatives will certainly emerge (e.g., illicit exchangers, vouchers, gift cards, purchases of assets controlled by threat actors, cash by mail), producing new symptoms that are harder to counter and where bans will certainly not be easy to implement (democratically). Again, selling these measures as silver bullets is very dangerous: one must address the causes, not the symptoms.

Privacy and anonymity also extend to an individual’s online finances and purchases, and these can be protected in several ways, not only with cryptocurrencies. Mullvad [31] itself advises its customers to pay with cash sent by mail to remain as anonymous as possible. If we want 100% traceability to curb illicit finance, should we then ban cash?

DeFi has value for privacy and anonymity and as such should not be banned a priori: Ethereum co-founder Vitalik Buterin admitted to having used the Tornado Cash mixer [32] to donate to Ukraine. This example shows how, even when one lives in a state that supports the cause, the right to privacy must be preserved without discrimination.

Wanting to donate to Ukraine is a great example of a valid need for financial privacy: even if the government where you live is in full support, you might not want Russian government to have full details of your actions.

— Jeff Coleman | Jeff.eth (@technocrypto) August 9, 2022

The impact of this decision on such users may prove irreversible; once again the EU shows shortsightedness in the fight against crime. This inability to find new solutions, and to avoid simplistic maneuvers for complex phenomena, is a worrying sign of the direction taken by the Commission and by those who represent it. We need to restore an environment in which every decision is (1) properly weighed, (2) presented correctly, and (3) grounded in research and empirical evidence, leaving misleading assertions aside.

The EU’s low R&D investment compared to the rest of the world [33][34][35] (due to various causes we will not cover here) has left the Union ill-equipped for modern challenges (not only in security but also in the economic and military spheres). The need to invest in research, strengthen law enforcement and choose effective approaches is felt more than ever, and it is leading to risky political choices for which we may pay the price in the not too distant future.

Beyond this, we want to stress how these proposals are presented by leveraging sensitive topics (protection of minors, criminal use of various technologies, protection of online users from abuse), exploiting so-called weaponized empathy [36]. This approach is one of many challenges for democracies and does not receive the attention it deserves from public opinion. By appealing to empathy, emotion and sensitivity, one can convince an audience of false things [37] or lead it to support decisions unsuited to the problems at hand.

Public opinion is understandably divided on proposals to introduce digital identity for social media or the Internet in general, and that is legitimate. What is surprising is the lack of pragmatic arguments about pros and cons from those who want such proposals enacted. No, “if you have nothing to hide” is not an argument. Neither is claiming that AGCOM’s choice protects minors. Nor is asserting that currencies like Monero are used only by criminals and should therefore be banned.

Everyone is responsible for choosing whether (and to what extent) to maintain their online privacy, but no one should be allowed to limit that freedom. If sensitivity counts on one side of the scale, it must be justified in the same way on the other.

There is often talk of introducing some form of emotional and relationship education in schools to prevent unpleasant episodes that have occurred in various communities. Regardless of one’s view on the subject, might it be worth presenting such a proposal also as a way to “empower” people and make them more resilient to the signals they are exposed to (including weaponized empathy)? When these proposals are announced, the climate is driven mostly by emotion and common-sense statements (“minors must be protected online”), without the much-needed demonstrations of effectiveness.

Working on this would help not only in these cases but also against phenomena such as misinformation and scams that exploit people’s sensitivity. Threats exist online, and anyone who chooses to face them needs to do so with the right preparation and knowledge.

Security is also achieved through resilience. To build resilience, one must leave one’s comfort zone and face the facts without sugarcoating them, which requires re-evaluating some positions previously considered “sacred”.